SRT vs RIST: What's the Difference?

Why do we need the SRT and RIST protocols?

In recent years, the internet industry has seen several waves of audio- and video-related trends, such as VR, short videos, and live streaming. VR is still held back by immature technology, while short videos and live streaming continue to gain popularity and find their way into new industry applications. However, the limitations of RTMP, the popular upstream transmission protocol, are becoming increasingly apparent: its FLV container format does not support new codecs or multiple audio tracks and has low timestamp accuracy. Additionally, because RTMP runs over TCP, which is designed for fair and reliable delivery, it is poorly suited to real-time audio and video transmission.

New upstream transmission methods have emerged in the industry, including SRT, RIST, WebRTC-based streaming, and fMP4-based streaming (DASH-IF Live Media Ingest Protocol).

What is the SRT Protocol?

1. Origin and Development of SRT Protocol

The SRT protocol is derived from the UDT protocol, in both protocol design and codebase. UDT is a UDP-based file transfer protocol originally designed for high-bandwidth, high-latency scenarios, such as long-distance fiber optic transmission, where TCP performs poorly. For detailed information about the UDT protocol, refer to "Experiences in Design and Implementation of a High Performance Transport Protocol" (Yunhong Gu, Xinwei Hong, and Robert L. Grossman, 2004). Haivision adopted UDT for streaming media transmission and added optimizations for streaming scenarios, such as fixed end-to-end latency, turning it into the SRT protocol. The SRT protocol standard is currently in the draft stage. Haivision open-sourced the libsrt project in 2017 and established the SRT Alliance, which now has over 500 members. Tencent MPS is one of the members of the SRT Alliance.

2. Features of SRT

- Two transmission modes: file transfer mode and live streaming mode. This article mainly focuses on the live streaming mode, which supports both lossy and lossless modes.

- As a transport protocol, SRT can carry any streaming media container format. However, in lossy mode the container format must have error recovery and resynchronization mechanisms, which leaves only the TS format or raw streams such as H.264 in Annex B format as options.

- Bidirectional transmission: SRT can replace TCP in some scenarios, such as using SRT as the transport layer for RTMP. However, it should be noted that SRT assumes that the bidirectional streams are independent of each other, while RTMP involves bidirectional signaling interaction. RTMP over SRT may have some subtle defects, as explained in detail later.

- Support for ARQ (Automatic Repeat Request) and FEC (Forward Error Correction) error recovery mechanisms.

- Connection bonding is supported but is still in the experimental stage.

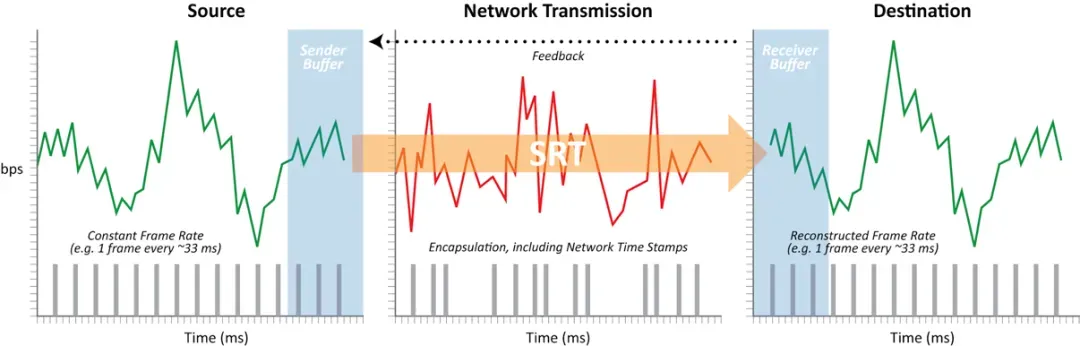

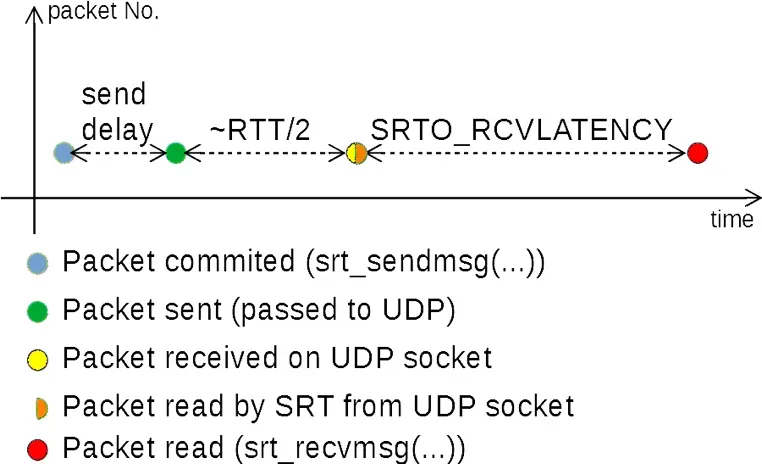

2.1 Fixed end-to-end latency

The most prominent feature of SRT is its fixed end-to-end latency. In general transmission protocols, the time from sending data (send()) to receiving data (receive()) fluctuates. SRT smooths out network jitter and ensures a relatively constant time from srt_sendmsg() to srt_recvmsg(). The srt_sendmsg() function timestamps the data, and the receiving end checks the timestamp to determine when to deliver the data to the upper-layer application. The core idea is not complicated: buffering against jitter and maintaining fixed latency based on timestamps. The implementation also considers time drift issues.

The end-to-end latency is approximately equal to the configured SRTO_RECVLATENCY + 1/2 RTT0, where RTT0 is the RTT calculated during the handshake phase. The configured latency should compensate for RTT fluctuations and take into account the number of packet retransmissions.
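The relationship above can be sketched in a few lines. The first function restates the formula from the text; the second is only a hypothetical rule of thumb (one RTT per retransmission round plus a jitter margin) and is an assumption for illustration, not a formula taken from libsrt.

```python
def estimated_end_to_end_latency_ms(recv_latency_ms: float, handshake_rtt_ms: float) -> float:
    """Approximate delay from srt_sendmsg() to srt_recvmsg() in live mode.

    Restates the text: configured receive latency plus half of RTT0,
    where RTT0 is the RTT measured during the handshake.
    """
    return recv_latency_ms + handshake_rtt_ms / 2.0


def suggested_recv_latency_ms(rtt_ms: float, rtt_jitter_ms: float, retransmit_rounds: int) -> float:
    """Hypothetical sizing rule (an assumption, not from libsrt).

    Budget roughly one RTT for every retransmission round you want to
    survive, then add a margin for RTT fluctuation.
    """
    return retransmit_rounds * rtt_ms + rtt_jitter_ms


if __name__ == "__main__":
    # 120 ms configured latency on a link with an 80 ms handshake RTT.
    print(estimated_end_to_end_latency_ms(120, 80))  # 160.0
    # Room for 3 retransmission rounds on a 300 ms link with 50 ms of jitter.
    print(suggested_recv_latency_ms(300, 50, 3))
```

The second function makes the trade-off visible: on a high-RTT link, each additional retransmission round that the latency budget must cover adds a full RTT to the end-to-end delay.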

The fixed end-to-end latency is controlled by the TSBPD (Timestamp-based packet delivery) switch, and whether packet loss is allowed is controlled by TLPKTDROP (Too late packet drop). The former is influenced by the latter, and only when packet loss is allowed can fixed latency be guaranteed. Otherwise, in poor network conditions, the received packets may exceed the configured latency. When TSBPD and TLPKTDROP are both enabled, timed-out packets are no longer retransmitted because, according to the fixed latency design, they would be discarded by the receiving end even if sent. Fixed latency means that either the data is delivered to the upper-layer application on time or expired packets are passively or actively discarded.
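The interaction between the two switches can be illustrated with a simplified receiver model. This is a sketch under stated assumptions, not libsrt's implementation: the `Packet` class, `schedule_delivery`, and the single `one_way_delay_ms` estimate are hypothetical names, clocks are assumed to be synchronized, and sequence-number wraparound is ignored.

```python
from dataclasses import dataclass


@dataclass
class Packet:
    seq: int
    send_ts_ms: float   # timestamp applied by the sender
    arrival_ms: float   # when the packet (or its retransmission) arrived


def schedule_delivery(packets, latency_ms, one_way_delay_ms, too_late_drop=True):
    """Return (delivered, dropped).

    delivered is a list of (seq, play_time_ms); dropped is a list of seq.
    Each packet is due at send timestamp + one-way delay + configured latency.
    """
    delivered, dropped = [], []
    last_play = 0.0
    for p in sorted(packets, key=lambda pkt: pkt.seq):
        due = p.send_ts_ms + one_way_delay_ms + latency_ms
        if p.arrival_ms <= due:
            # On time: hold the packet until its due time (fixed latency).
            play = max(due, last_play)
        elif too_late_drop:
            # Too late and dropping is allowed: skip it, keep the schedule.
            dropped.append(p.seq)
            continue
        else:
            # Too late but dropping is not allowed: deliver late, which also
            # delays every packet queued behind it.
            play = max(p.arrival_ms, last_play)
        delivered.append((p.seq, play))
        last_play = play
    return delivered, dropped


if __name__ == "__main__":
    pkts = [Packet(0, 0, 40), Packet(1, 40, 400), Packet(2, 80, 120)]
    print(schedule_delivery(pkts, latency_ms=120, one_way_delay_ms=40))
    print(schedule_delivery(pkts, latency_ms=120, one_way_delay_ms=40, too_late_drop=False))
```

With dropping enabled, packet 1 is discarded and packet 2 still plays on schedule. With dropping disabled, packet 1 is delivered late and pushes packet 2 past its due time, which is why fixed latency can only be guaranteed when loss is allowed.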

The fixed latency at the transport layer simplifies the buffer design for the receiving end consumer. For example, in a player, there are usually multiple levels of buffering, including buffers within the I/O module or between I/O and demux, as well as buffering queues between demux and rendering. The core buffer module is generally placed after demux and before decoding because it allows for calculating the duration of buffered data. The duration can also be calculated after decoding but the data volume after decoding is large, making it unsuitable for buffering data for a long time and controlling the buffer level. By achieving fixed latency buffering in the I/O module, the player can eliminate the buffering modules between I/O and demux, and demux and decoding, and only require simple data management. The multi-level buffering design introduces high latency, and the buffer in the I/O module is only a small part of the entire player buffer, resulting in weak packet loss recovery capability. By handing over the buffer management to the transport module, the packet loss recovery capability is enhanced.

2.2 Resilience to weak network conditions

SRT supports two mechanisms for combating weak networks, ARQ and FEC. Additionally, the broadcast mode in link bonding can also improve data transmission reliability.

2.2.1 ARQ

SRT's ARQ design uses both ACK and NACK mechanisms. The receiver sends ACK in two situations:

- Full ACK is sent every 10 ms.

- Light ACK is sent every 64 packets received.

In addition to the usual ACK and NACK packets, SRT also has ACKACK packets.

- After receiving an ACK, the sender immediately replies with an ACKACK. The receiver takes the time from sending the ACK to receiving the matching ACKACK as the RTT.

- Upon receiving ACKACK, the receiver stops retransmitting the same ACK packet; otherwise, it would be sent multiple times.
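The ACK/ACKACK exchange described above amounts to a small piece of receiver-side bookkeeping. The sketch below is illustrative only: `AckRttTracker` and its methods are hypothetical names, and it stores the latest raw sample, whereas a real implementation would typically smooth samples over time.

```python
class AckRttTracker:
    """Remember when each ACK was sent and time the matching ACKACK."""

    def __init__(self):
        self._sent_at_ms = {}   # ACK number -> time the ACK was sent
        self.rtt_ms = None      # latest raw RTT sample

    def on_ack_sent(self, ack_number, now_ms):
        self._sent_at_ms[ack_number] = now_ms

    def is_pending(self, ack_number):
        """True while the ACK is still unconfirmed and may need resending."""
        return ack_number in self._sent_at_ms

    def on_ackack_received(self, ack_number, now_ms):
        """Return the RTT sample, or None for an unknown or duplicate ACKACK."""
        sent = self._sent_at_ms.pop(ack_number, None)
        if sent is None:
            return None
        self.rtt_ms = now_ms - sent
        return self.rtt_ms


if __name__ == "__main__":
    tracker = AckRttTracker()
    tracker.on_ack_sent(7, now_ms=1000.0)
    print(tracker.on_ackack_received(7, now_ms=1321.0))  # 321.0
    print(tracker.is_pending(7))                         # False
```

Removing the entry when the ACKACK arrives models the second bullet: once confirmed, that ACK no longer needs to be sent again.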

NACK is sent by the receiver in two situations:

- When there are gaps in the received packet sequence numbers.

- Periodically, when the receiver checks for packet loss, at an interval of max((RTT + 4 * RTTVar) / 2, 20) ms.

Periodic NACK may result in a packet being retransmitted more than once, but it ensures low latency.
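Both NACK triggers are easy to express in code. The following is a minimal sketch assuming millisecond units and ignoring sequence-number wraparound; the function names are hypothetical.

```python
def periodic_nak_interval_ms(rtt_ms, rtt_var_ms, floor_ms=20.0):
    """Interval between periodic loss reports: max((RTT + 4 * RTTVar) / 2, 20)."""
    return max((rtt_ms + 4 * rtt_var_ms) / 2, floor_ms)


def missing_sequences(last_in_order_seq, received_seq):
    """Sequence numbers skipped when received_seq follows last_in_order_seq.

    A non-empty result is the gap that triggers an immediate NACK.
    Wraparound of the sequence space is ignored in this sketch.
    """
    return list(range(last_in_order_seq + 1, received_seq))


if __name__ == "__main__":
    print(periodic_nak_interval_ms(300, 50))  # 250.0
    print(periodic_nak_interval_ms(10, 2))    # 20.0 (floor applies)
    print(missing_sequences(5, 9))            # [6, 7, 8]
```

On a 300 ms link the periodic report fires roughly every 250 ms, which is shorter than one RTT. That is why a packet may be requested again before its first retransmission has had time to arrive.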

2.2.2 Simulation testing

SRT has strong resistance to random packet loss, but in high packet loss scenarios it consumes more bandwidth. Using FFmpeg as the SRT client and server, various packet loss rates (0%, 10%, 20%, 50%, 70%) were simulated using NetEm to create a weak network environment. The video bitrate was approximately 5 Mbps with a frame rate of 25 fps.

Even with a 70% packet loss, the receiver can still maintain a stable frame rate. On the other hand, at a 20% packet loss rate, the bandwidth usage is already twice the video bitrate. These experimental results represent scenarios with sufficient bandwidth and high random packet loss. In production environments, more adverse scenarios with insufficient available bandwidth need to be considered.
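For context, a simple lower bound on the bandwidth that any ARQ scheme needs under random loss can be worked out as follows. This is a back-of-the-envelope sketch that assumes independent losses and no duplicate retransmissions; it is not a model of libsrt's actual behavior.

```python
def min_send_rate_mbps(video_mbps, loss_rate):
    """Ideal ARQ lower bound on the send rate.

    With independent loss probability p, each packet needs on average
    1 / (1 - p) transmissions before one copy gets through.
    """
    if not 0 <= loss_rate < 1:
        raise ValueError("loss_rate must be in [0, 1)")
    return video_mbps / (1 - loss_rate)


if __name__ == "__main__":
    for loss in (0.0, 0.1, 0.2, 0.5, 0.7):
        print(f"loss={loss:.0%} -> at least {min_send_rate_mbps(5, loss):.2f} Mbps")
```

At 20% loss the bound is 1.25 times the video bitrate, below the roughly 2 times observed in the test. One plausible contributor is the periodic NACK mechanism, which the ARQ section notes can cause a packet to be retransmitted more than once.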

In addition to the fixed latency feature, resistance to random packet loss can also help reduce latency. When TCP encounters packet loss, congestion control reduces its sending speed and retransmission is slow, so data accumulates at the sender and latency increases. The following graph shows a comparison of latency in a scenario with high RTT and 1% random packet loss. Since the server used for testing does not support SRT downstream, only 1% packet loss was simulated upstream.

RTT ranges from 250 ms to 400 ms with significant fluctuations.

Ping:

time=321.291 ms

time=291.814 ms

time=362.499 ms

2.3 Congestion control/pacing

SRT file mode has congestion control, while live mode only has pacing control without congestion control. However, libsrt provides rich statistical information, allowing the application layer to adjust video capture and encoding based on the statistics to avoid congestion and buffering issues. From the perspective of real-time performance in a live streaming system, only doing congestion control at the transport layer cannot guarantee low latency and cope with situations where the transmission bandwidth is consistently lower than the video bitrate.

SRT's pacing calculates the packet-sending interval based on the maximum sending bandwidth. The maximum sending bandwidth is determined by three strategies:

- Manually configuring the maximum sending bandwidth (max_bw).

- Calculating max_bw = input_bw * (1 + overhead) based on the input bitrate and overhead.

- Configuring the overhead and automatically estimating the input bandwidth, max_bw = input_bw_estimate * (1 + overhead).

These configurations have priorities. For example, if max_bw is configured, input_bw, overhead, and other configurations are ignored. The table below shows which variables each mode uses (v = used, - = ignored):

| Mode / Variable | MAX_BW | INPUT_BW | OVERHEAD |
| --- | --- | --- | --- |
| MAXBW_SET | v | - | - |
| INPUTBW_SET | - | v | v |
| INPUTBW_ESTIMATED | - | - | v |

Note: The default configuration for SRT live mode is max_bw 1 Gbps. When the data writing speed to SRT is not uniform enough, a high max_bw value can lead to ineffective pacing control, causing a sudden increase in the packet loss rate. Understanding and properly configuring these three parameters is crucial.
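The priority rules and the resulting packet spacing can be sketched as follows. The function names, the 25% overhead default, and the 1316-byte packet size (seven 188-byte TS packets) are assumptions chosen for illustration; they are not read from libsrt.

```python
def effective_max_bw(max_bw=None, input_bw=None, estimated_input_bw=None, overhead=0.25):
    """Pick the pacing bandwidth according to the three modes (bits per second)."""
    if max_bw is not None:
        # MAXBW_SET: an explicit limit wins; input_bw and overhead are ignored.
        return max_bw
    if input_bw is not None:
        # INPUTBW_SET: configured input bitrate plus retransmission overhead.
        return input_bw * (1 + overhead)
    if estimated_input_bw is None:
        raise ValueError("need max_bw, input_bw, or an input bandwidth estimate")
    # INPUTBW_ESTIMATED: measured input bitrate plus retransmission overhead.
    return estimated_input_bw * (1 + overhead)


def packet_interval_us(packet_bytes, max_bw_bits_per_s):
    """Microseconds between packet sends at the given pacing bandwidth."""
    return packet_bytes * 8 * 1_000_000 / max_bw_bits_per_s


if __name__ == "__main__":
    paced = effective_max_bw(input_bw=5_000_000, overhead=0.25)
    print(paced, packet_interval_us(1316, paced))
    # With a 1 Gbps limit, the spacing between packets shrinks to about 10.5 us.
    print(packet_interval_us(1316, 1_000_000_000))
```

The last line shows why a 1 Gbps limit offers little protection for a 5 Mbps stream: bursts written by the application are forwarded almost back to back instead of being spread out.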

3. SRT Support Status

- Currently, there is only one implementation of the SRT protocol, which is libsrt. Libsrt can function as both a client and a server, supporting both upstream streaming and downstream playback. However, due to issues such as global locks, excessive threads, and high CPU usage in the code implementation, libsrt is not suitable for large-scale downstream playback scenarios. Currently, it is mainly used for upstream streaming to improve the quality of transmission.

- FFmpeg provides libsrt encapsulation with comprehensive option configuration support. However, the FFmpeg avio interface lacks support for statistical information, resulting in the loss of all statistical information from libsrt. It is important to note that FFmpeg's libavformat encapsulation of libsrt does not consider the scenario of bidirectional transmission, resulting in the loss of bidirectional transmission capability.

- VLC supports SRT playback and streaming. Note that the released VLC 3.0 does not support streamid configuration; only the master development branch supports it.

- OBS supports SRT through FFmpeg's libavformat.

- GStreamer has libsrt encapsulation.

- Tencent MPS's audio and video SDK and services support RTMP over SRT, allowing customers using RTMP-based solutions to seamlessly switch to this new solution.

4. SRT Future Outlook

SRT has strong resistance to random packet loss, but it consumes more bandwidth in high packet loss rate scenarios. It is suitable for low-latency upstream streaming scenarios with sufficient network bandwidth. When the upstream bandwidth is low, SRT's statistical information can be used to dynamically adjust encoding parameters to avoid congestion and buffering. SRT has room for improvement in the following directions:

4.1 Parameter configuration

SRT is targeted at the broadcasting industry, requiring manual testing of network conditions for parameter tuning. The configuration is complex and lacks adaptive features, making it difficult to apply a single set of configurations to cope with changing network environments. Simplifying configuration and adding adaptive strategies are optimization directions for SRT.

For example, latency is currently configured in absolute time units (milliseconds). A good latency value depends on the network's RTT, but the RTT is only known at runtime. If latency could instead be expressed in units of RTT, as latency = N * RTT, configuration would be much simpler and would adapt to different network conditions.

4.2 Congestion control

Currently, SRT lacks congestion control and only has simple methods such as configuring input bitrate and output bandwidth limit. It does not perform well in scenarios where the transmission bandwidth is lower than the video bitrate, the video bitrate changes significantly, or the network is unstable. SRT should have basic congestion control strategies combined with video encoding to cope with congestion.

4.3 Container format

SRT does not limit the container format, but lossy mode relies on a container format with error recovery and resynchronization mechanisms, leaving only TS as a viable option. However, TS has high encapsulation overhead from several sources, such as the fixed 4-byte header on every TS packet, padding, and null packets when a constant bitrate is used.
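A rough sense of the header and padding cost can be obtained with a little arithmetic. The sketch below is deliberately simplified: it counts only the 4-byte TS header and the padding needed to fill the last 188-byte packet, and ignores PES headers, adaptation fields, PSI tables, and null packets. The function names are hypothetical.

```python
TS_PACKET_BYTES = 188
TS_HEADER_BYTES = 4
TS_PAYLOAD_BYTES = TS_PACKET_BYTES - TS_HEADER_BYTES  # 184


def ts_header_overhead():
    """Fraction of every TS packet spent on the fixed header."""
    return TS_HEADER_BYTES / TS_PACKET_BYTES


def ts_packets_needed(data_bytes):
    """TS packets required to carry data_bytes (ceiling division)."""
    return -(-data_bytes // TS_PAYLOAD_BYTES)


def padding_bytes(data_bytes):
    """Bytes of stuffing needed to fill the final TS packet."""
    return ts_packets_needed(data_bytes) * TS_PAYLOAD_BYTES - data_bytes


if __name__ == "__main__":
    print(f"header overhead: {ts_header_overhead():.1%}")
    # A 200-byte payload carried on its own occupies two full TS packets.
    n = ts_packets_needed(200)
    print(n, n * TS_PACKET_BYTES, padding_bytes(200))
```

The fixed header costs about 2%, but small payloads that must be packetized on their own (for example, a short audio frame) can waste far more on padding, which is where much of the TS overhead comes from.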

For the lossless mode, there are several container format options, including FLV, MKV, and fMP4.

- FLV has the advantage of simplicity and compatibility with the old ecosystem but has significant drawbacks, such as not supporting new codecs, low timestamp accuracy, and limitations on audio tracks.

- MKV, including its variant WebM supported by Google, is mainly maintained by the open-source community and lacks commercial support.

- fMP4 is the most comprehensive in terms of supporting new codecs and is the basis for standards such as DASH, HLS, and CMAF. The application of fMP4 in China has not yet become widespread, but it is expected to receive increasingly broad support.

RTMP over SRT allows traditional RTMP-based upstream SDKs to seamlessly migrate. The advantage is the smooth integration with existing RTMP streaming systems, but there are some additional considerations:

- SRT was not designed with coupled bidirectional data in mind, whereas RTMP's bidirectional signaling is coupled. A known issue is that if an RTMP handshake packet is lost, the SRT retransmission mechanism may fail to recover it, causing the RTMP connection to fail. The reason is that when a receiver has periodic NAK reports enabled (SRTO_NAKREPORT), the sender does not apply any RTO, because it expects to receive the loss report from the receiver. If the lost packet is the last one in a transmission, it is never retransmitted. The assumption is that in live mode you do not normally care about the last packet and simply close the connection on the sender's side when there is nothing more to send. Refer to the GitHub issue "LIVE mode transport deadlock" for more details.

- RTMP code implementations may write many small data packets. TCP can aggregate data, but it is challenging to achieve data aggregation with RTMP over SRT, resulting in the transmission of a large number of small IP packets.

- In unidirectional transmission, the sender's RTT information is brought by the receiver through ACK packets. In bidirectional transmission, both sides can calculate the RTT. However, for RTMP over SRT streaming, after the initial stage, only the upstream has data for transmission, and the client's RTT update mechanism no longer works.

What is the RIST Protocol?

1. Features of RIST

The RIST protocol was proposed in 2017 and has released two profiles to date: the simple profile in 2018 and the main profile in 2020.

The simple profile inherits the RTP protocol and is compatible with it. It adds the following to the RFC 3550 RTP protocol:

- NACK: It defines NACK packets in bitmask format as defined in RFC 4585 Extended RTP Profile for Real-time Transport Control Protocol (RTCP)-Based Feedback (RTP/AVPF). It also sends range-based NACK packets based on application-defined RTCP.

- RTT measurement: It sends Echo request and Echo response based on application-defined RTCP to measure RTT.

- Bonding support: The sender sends data through multiple network interfaces in two modes:

  - Redundancy mode: It sends duplicate data through multiple network interfaces to increase reliability.

  - Round-robin mode: It sends different data through different network interfaces in a round-robin manner to increase bandwidth.

  - The receiver performs sorting, deduplication, and other operations on data sent by the same client through a pair of RTP/RTCP ports.
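The bitmask NACK format mentioned in the list above is the generic NACK of RFC 4585: each 32-bit entry holds a 16-bit packet ID (PID) for the first lost packet and a 16-bit bitmask (BLP) in which bit i, counting from the least significant bit, marks packet PID + i + 1 as lost. The sketch below groups lost sequence numbers into such entries. The function names are hypothetical, and sequence-number wraparound is not handled.

```python
import struct


def build_generic_nacks(lost_seqs):
    """Group lost 16-bit RTP sequence numbers into (PID, BLP) pairs."""
    remaining = sorted(set(seq & 0xFFFF for seq in lost_seqs))
    pairs = []
    while remaining:
        pid = remaining[0]
        blp = 0
        rest = []
        for seq in remaining[1:]:
            offset = seq - pid
            if 1 <= offset <= 16:
                # Bit (offset - 1) flags packet pid + offset as lost.
                blp |= 1 << (offset - 1)
            else:
                rest.append(seq)  # too far away; goes into a later entry
        pairs.append((pid, blp))
        remaining = rest
    return pairs


def pack_fci(pairs):
    """Serialize (PID, BLP) pairs in network byte order."""
    return b"".join(struct.pack("!HH", pid, blp) for pid, blp in pairs)


if __name__ == "__main__":
    pairs = build_generic_nacks([100, 101, 103, 116, 117])
    print([(pid, hex(blp)) for pid, blp in pairs])
    print(pack_fci(pairs).hex())
```

One entry covers at most 17 consecutive sequence numbers, so a long burst loss needs many entries. That is the case the range-based NACK is meant to cover more compactly.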

RIST retains the multicast mode of RTP, but currently, it is only suitable for controlled local network environments due to the special nature of multicast.

In addition to the features of the simple profile, the main profile adds the following features:

- Stream multiplexing and tunneling:

  - Implemented based on GRE-over-UDP (RFC 8086).

  - RTP and RTCP share the same transport channel and port.

  - Multiple streams can share the same transport channel and port.

  - Multiple streams plus user-defined data streams can share the same transport channel and port.

- Encryption:

  - DTLS encryption.

  - Pre-Shared Key encryption.

- TS null packet removal.

- High bitrate and high latency support:

  - Scenario: When transmitting a 100 Mb/s TS stream, the 16-bit RTP sequence number wraps around every 6.9 seconds. When the ARQ retransmission limit is configured as 7, the maximum supported RTT is about 1 second. In other words, in scenarios with high bitrates and high latency, the RTP sequence number wraparound limits the number of retransmissions.

  - Solution: Use an RTP header extension to extend the RTP sequence number to 32 bits.
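The numbers in the scenario above can be reproduced with a short calculation. It assumes each RTP packet carries seven 188-byte TS packets (1316 bytes) and that every retransmission round takes about one RTT; both are assumptions made for this sketch, and the function names are hypothetical.

```python
def wrap_period_s(bitrate_bps, seq_bits=16, payload_bytes=1316):
    """Seconds until the RTP sequence number space is exhausted."""
    return (2 ** seq_bits) * payload_bytes * 8 / bitrate_bps


def max_rtt_s(bitrate_bps, retransmissions, seq_bits=16, payload_bytes=1316):
    """Largest RTT for which all retransmission rounds fit in one wrap period."""
    return wrap_period_s(bitrate_bps, seq_bits, payload_bytes) / retransmissions


if __name__ == "__main__":
    print(f"16-bit wrap at 100 Mb/s: {wrap_period_s(100e6):.1f} s")
    print(f"max RTT with 7 retransmissions: {max_rtt_s(100e6, 7):.2f} s")
    days = wrap_period_s(100e6, seq_bits=32) / 86400
    print(f"32-bit wrap at 100 Mb/s: {days:.1f} days")
```

With a 32-bit sequence number the wrap period at the same bitrate grows from seconds to days, so wraparound no longer constrains the retransmission count.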

2. RIST Future Outlook

In the broadcasting industry, RIST and SRT compete with each other. In the internet industry, RIST still has the following issues to be resolved:

- The simple profile has session/streamid and port allocation issues:

  - RIST follows the RTP approach, using IP and port as the session, which means a server cannot support multiple clients with one or a pair of ports. For each additional client, the server needs to open two new ports. Except for specific restricted networks, large-scale deployment in the public network faces challenges.

  - Apart from IP and port as the mapping between clients and servers, the RIST standard does not define other means to uniquely identify clients. Clients cannot pass streamid-like identification information to the server.

- The main profile does not support backward compatibility with the simple profile: When one end is configured as the simple profile and the other end is configured as the main profile, there is no complete set of fallback strategies to automatically downgrade the main profile end to the simple profile.

SRT vs RIST: Which is Right for Your Needs?

SRT and RIST are both protocols designed for reliable video transport over the internet. However, they have some differences:

Origin and Support: SRT was developed and open-sourced by Haivision. It has a large community and is supported by the SRT Alliance, which includes over 500 members. On the other hand, RIST was developed by the Video Services Forum (VSF), a consortium of broadcast industry vendors.

Error Correction: Both protocols use ARQ (Automatic Repeat reQuest) for error correction. RIST relies on NACK-based retransmission from a retransmission buffer, while SRT combines ACK and NACK and can additionally use FEC (Forward Error Correction).

Security: SRT includes built-in AES encryption to secure the streams. In RIST, encryption (DTLS or pre-shared key) is available only in the main profile.

Compatibility: RIST is designed to be compatible with existing protocols and standards, including RTP (Real-time Transport Protocol). SRT, however, is a newer protocol and may not be as compatible with existing infrastructure.

Latency: Both protocols handle network congestion and packet loss to minimize latency, but they do it in different ways. SRT uses a technique called "too-late packet drop" while RIST uses a combination of packet retransmission and jitter buffer management.

In summary, both SRT and RIST are designed to deliver high-quality video over unreliable networks, but they differ in their approach to error correction, security, compatibility, and latency management. The choice between the two often depends on the specific requirements of the use case.

How to use SRT for streaming on Tencent MPS?

Tencent MPS supports the SRT protocol for live streaming, and customer feedback indicates that SRT significantly reduces buffering during streaming compared to traditional RTMP. You can obtain an SRT push address in either of two ways:

1. Assemble the URL yourself according to the splicing rules. For detailed operations, please refer to Splicing Live Streaming URLs.

2. Go to Tools > Address Generator in the Cloud Streaming Services console, select URL type: Push Address, and select a domain name as needed. For detailed operations, please refer to Address Generator.

If you have any questions about our services, don't hesitate to Contact Us.