New Era of Spatial Computing with Apple Vision Pro

What is Vision Pro?

Apple announced its first spatial computing device, Vision Pro, on June 5, 2023, and opened pre-orders in January 2024. Vision Pro blends digital content into the real world: it offers an effectively unbounded canvas, pairs spatial audio with its very large virtual screen, breaks through the limits of traditional displays, and gives users a new 3D interactive experience, leading the industry into the era of spatial computing.

To deliver high-quality spatial video, Vision Pro uses the MV-HEVC (Multiview High Efficiency Video Coding) standard for encoding. For delivery, it uses standard fMP4 (fragmented MP4) segments over HLS.

Below we walk through the complete workflow: encoding MV-HEVC, packaging it into the ISOBMFF (ISO Base Media File Format) container, and distributing it via HLS for playback on Vision Pro.

Comparing HEVC and MV-HEVC

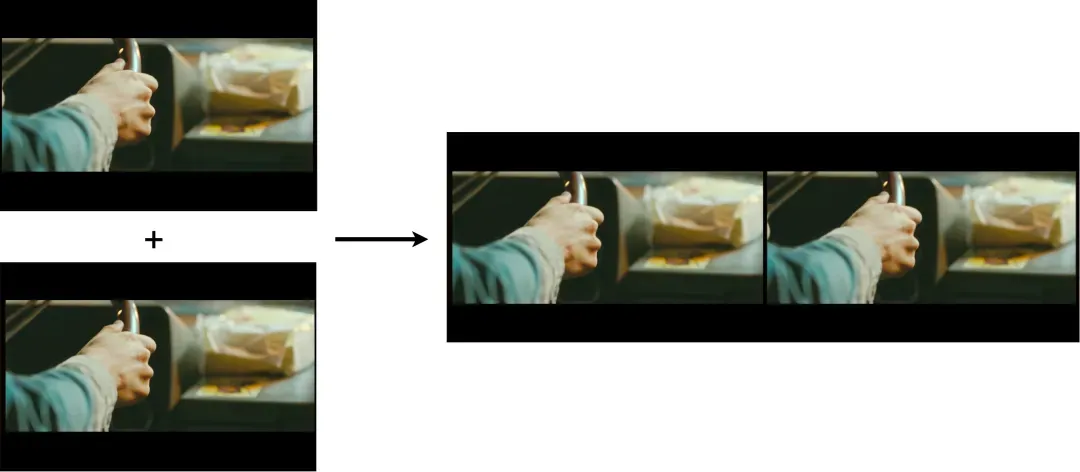

Most 3D video content in common use today is encoded, transmitted, and displayed as a pair of left and right viewpoint images. The left viewpoint is generally called the main viewpoint, and the right viewpoint the auxiliary viewpoint. The common industry practice is to combine the left and right viewpoints into a single 3D video frame in SBS (side-by-side) form and then encode the merged sequence.
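As a minimal sketch of the SBS packing described above, the following Python snippet joins each row of a left view with the matching row of a right view. Frames are modeled as plain lists of pixel rows, and the function name `pack_side_by_side` is a hypothetical helper chosen for illustration, not part of any encoder API.

```python
def pack_side_by_side(left, right):
    """Build one SBS frame: each output row is a left row followed by the right row."""
    if len(left) != len(right):
        raise ValueError("both views must have the same height")
    packed = []
    for left_row, right_row in zip(left, right):
        if len(left_row) != len(right_row):
            raise ValueError("both views must have the same width")
        packed.append(list(left_row) + list(right_row))
    return packed


# Tiny 2x2 "frames" with made-up pixel values, for illustration only.
left_view = [[1, 2], [3, 4]]
right_view = [[5, 6], [7, 8]]
sbs_frame = pack_side_by_side(left_view, right_view)
```

The packed frame is twice as wide as either view, and a standard encoder then treats it as one ordinary 2D picture.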

The disadvantage of this solution is that the encoder cannot exploit the correlation between the two viewpoints, so the redundant information between them is not eliminated.

Take the HEVC encoder as an example. It has no long-range intra-frame search, so the left and right halves of the same frame cannot predict each other. In addition, because the motion estimation search range is limited, inter-frame prediction cannot use information from the other viewpoint.

The figure below depicts the motion vectors of inter-frame prediction. It can be seen that there is no inter-frame prediction across viewpoints, and the redundant information between viewpoints has not been eliminated.

Therefore, if the information redundancy between the left and right viewpoints of 3D video can be eliminated, the efficiency of the encoder will be greatly improved.

To address the characteristics of 3D video, in particular multi-view 3D video, the JCT-3V standards expert group was established. In 2014 it published MV-HEVC, an extension of the HEVC coding standard for multi-view 3D video coding.

The MV-HEVC (Multiview HEVC) standard makes use of a syntax element in the NALU header: LayerId (nuh_layer_id in the HEVC specification). It indicates the viewpoint to which the frame (or slice) carried in the NALU belongs. In 3D video, LayerId 0 usually indicates the left view (main view), and LayerId 1 the right view (auxiliary view). Frames that share the same POC (picture order count) but have different LayerIds together form an AU (access unit). Main-view pictures follow the reference rules of basic HEVC. Each auxiliary-view picture is also encoded with basic HEVC tools, but gains one additional inter-view reference frame: the main-view frame with the same POC. This reference structure makes prediction between viewpoints possible.
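To make the role of LayerId concrete, here is a small Python sketch that reads the two-byte HEVC NAL unit header (1-bit forbidden_zero_bit, 6-bit nal_unit_type, 6-bit nuh_layer_id, 3-bit nuh_temporal_id_plus1). The `NalHeader` type and `parse_nal_header` function are hypothetical names used only for this illustration.

```python
from typing import NamedTuple


class NalHeader(NamedTuple):
    nal_unit_type: int
    layer_id: int      # 0 = main (left) view, 1 = auxiliary (right) view in the usual stereo setup
    temporal_id: int


def parse_nal_header(nal: bytes) -> NalHeader:
    """Decode the 2-byte HEVC NAL unit header at the start of `nal`."""
    if len(nal) < 2:
        raise ValueError("an HEVC NAL unit header is 2 bytes long")
    header = int.from_bytes(nal[:2], "big")
    if header >> 15:
        raise ValueError("forbidden_zero_bit must be 0")
    nal_unit_type = (header >> 9) & 0x3F   # bits 14..9
    layer_id = (header >> 3) & 0x3F        # bits 8..3
    temporal_id = (header & 0x07) - 1      # bits 2..0 hold temporal_id + 1
    return NalHeader(nal_unit_type, layer_id, temporal_id)


# Hand-built example headers: same NAL unit type, different layers.
main_view = parse_nal_header(bytes([0x02, 0x01]))
aux_view = parse_nal_header(bytes([0x02, 0x09]))
```

A demuxer or player could use `layer_id` in this way to decide whether a NALU belongs to the main view or to the auxiliary view.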

The figure below shows the motion vector diagram of right-view inter-frame prediction of a 3D video encoded in accordance with the MV-HEVC standard. It can be seen that the right view uses a large number of inter-view reference modes, fully eliminating redundant information between views.

Extending MV-HEVC using the ISOBMFF standard

Ordinary ISOBMFF videos use the Sample Description Box to store decoder parameter information. For an HEVC stream, this is the HEVC Decoder Configuration Record. For spatial video, we need to store the parameter information of the auxiliary view in addition to that of the main view, so that spatial video can be distributed.

The ISOBMFF standard adds a new L-HEVC Decoder Configuration Record that stores the auxiliary-view parameter information. Its structure is as follows:

    aligned(8) class LHEVCDecoderConfigurationRecord {
        unsigned int(8) configurationVersion = 1;
        bit(4) reserved = '1111'b;
        unsigned int(12) min_spatial_segmentation_idc;
        bit(6) reserved = '111111'b;
        unsigned int(2) parallelismType;
        bit(2) reserved = '11'b;
        bit(3) numTemporalLayers;
        bit(1) temporalIdNested;
        unsigned int(2) lengthSizeMinusOne;
        unsigned int(8) numOfArrays;
        for (j=0; j < numOfArrays; j++) {
            unsigned bit(1) array_completeness;
            bit(1) reserved = 0;
            unsigned int(6) NAL_unit_type;
            unsigned int(16) numNalus;
            for (i=0; i< numNalus; i++) {
                unsigned int(16) nalUnitLength;
                bit(8*nalUnitLength) nalUnit;
            }
        }
    }

To signal that the HEVC stream is MV-HEVC, the HEVC Decoder Configuration Record carries one SEI message that ordinary HEVC video does not: three_dimensional_reference_displays_info. This SEI message mainly stores information such as the IDs of the MV-HEVC left and right views.
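The LHEVCDecoderConfigurationRecord layout listed above can be read with a few bit operations. The following Python sketch follows the field order of that listing; the function name `parse_lhevc_config`, the dictionary keys, and the sample bytes are assumptions made for illustration, not taken from any real file or library.

```python
import struct


def parse_lhevc_config(data: bytes) -> dict:
    """Parse an LHEVCDecoderConfigurationRecord payload (box header already removed)."""
    if len(data) < 6:
        raise ValueError("record is shorter than its fixed 6-byte header")
    # Bytes 1-2: 4 reserved bits, then 12-bit min_spatial_segmentation_idc.
    (word,) = struct.unpack_from(">H", data, 1)
    packed = data[4]  # 2 reserved, 3 numTemporalLayers, 1 temporalIdNested, 2 lengthSizeMinusOne
    record = {
        "configuration_version": data[0],
        "min_spatial_segmentation_idc": word & 0x0FFF,
        "parallelism_type": data[3] & 0x03,
        "num_temporal_layers": (packed >> 3) & 0x07,
        "temporal_id_nested": (packed >> 2) & 0x01,
        "length_size_minus_one": packed & 0x03,
        "arrays": [],
    }
    pos = 6
    for _ in range(data[5]):  # numOfArrays
        if pos + 3 > len(data):
            raise ValueError("truncated NAL unit array header")
        first = data[pos]
        (num_nalus,) = struct.unpack_from(">H", data, pos + 1)
        pos += 3
        nalus = []
        for _ in range(num_nalus):
            if pos + 2 > len(data):
                raise ValueError("truncated NAL unit length")
            (length,) = struct.unpack_from(">H", data, pos)
            pos += 2
            nalu = data[pos:pos + length]
            if len(nalu) != length:
                raise ValueError("truncated NAL unit")
            nalus.append(nalu)
            pos += length
        record["arrays"].append({
            "array_completeness": first >> 7,
            "nal_unit_type": first & 0x3F,
            "nalus": nalus,
        })
    return record


# Hand-built sample: one array holding a single 2-byte NAL unit of type 32.
sample_record = bytes([0x01, 0xF0, 0x00, 0xFC, 0xCF, 0x01,
                       0xA0, 0x00, 0x01, 0x00, 0x02, 0x40, 0x01])
parsed = parse_lhevc_config(sample_record)
```

In a real file the NAL units in these arrays would be the parameter sets for the auxiliary view; the sample here only exercises the bit layout.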

In addition to the parameter information, the extension also writes a Box named VideoExtendedUsage into the Sample Description Box. The specific structure is shown in the table below. The core member of this Box is the StereoViewInformation Box, which indicates whether a left view and a right view are present. In spatial video, both are present.

The above covers the parameter information. The standard also stipulates that the NALUs (Network Abstraction Layer Units) of the left and right views must be stored together as one frame of data, which means they share the same PTS (presentation timestamp) and DTS (decoding timestamp).

With the above in place, container packaging support for MV-HEVC is complete. The resulting ISOBMFF video is also backward compatible: players that do not support MV-HEVC parse, decode, and render only the Base Layer and display it in 2D, while players that support MV-HEVC parse, decode, and render both the Base Layer and the Secondary Stereo Layer, providing a more realistic 3D experience.
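The rule that both views travel in one frame of data can be sketched as follows. The snippet writes the NALUs of one access unit into a single length-prefixed sample, which is the usual way NAL units are stored in ISOBMFF samples. The function name `build_sample`, the 4-byte length prefix, and the base-view-first ordering are assumptions made for this illustration.

```python
def build_sample(base_nalus, secondary_nalus, length_size=4):
    """Pack the NALUs of both views of one access unit into one sample.

    Each NALU is prefixed with its size as a big-endian integer of
    `length_size` bytes (lengthSizeMinusOne + 1 in the configuration record).
    """
    sample = bytearray()
    for nalu in list(base_nalus) + list(secondary_nalus):
        sample += len(nalu).to_bytes(length_size, "big")
        sample += nalu
    return bytes(sample)


# Placeholder NALUs: 2-byte header plus one dummy payload byte each.
left_nalu = bytes([0x02, 0x01, 0xAA])    # LayerId 0
right_nalu = bytes([0x02, 0x09, 0xBB])   # LayerId 1
sample = build_sample([left_nalu], [right_nalu])
```

Because both views sit in the same sample, they automatically share one PTS and one DTS in the track's timing tables.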

Distribution support using HLS

HLS is a long-established HTTP-based adaptive streaming protocol that is widely used for live and on-demand video. To support the distribution of spatial video, the following features were added to the existing HLS standard:

EXT-X-VERSION is 12;

EXT-X-STREAM-INF in the Multivariant Playlist gains a new attribute: REQ-VIDEO-LAYOUT. When the stream is MV-HEVC, it is set to REQ-VIDEO-LAYOUT="CH-STEREO"; for a normal HEVC stream, the attribute does not need to appear.
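As a rough sketch of how the two HLS changes above fit together, the Python snippet below generates a Multivariant Playlist with EXT-X-VERSION set to 12 and adds REQ-VIDEO-LAYOUT="CH-STEREO" only to MV-HEVC variants. The function name, the dictionary keys, the URIs, the bandwidth figures, and the CODECS string are placeholders assumed for illustration; real values depend on the encoded streams.

```python
def build_multivariant_playlist(variants):
    """Return Multivariant Playlist text for the given variant descriptions."""
    lines = ["#EXTM3U", "#EXT-X-VERSION:12"]
    for variant in variants:
        codecs = variant["codecs"]
        attrs = [
            "BANDWIDTH={}".format(variant["bandwidth"]),
            'CODECS="{}"'.format(codecs),
        ]
        if variant.get("stereo"):
            # Only MV-HEVC variants carry the stereo layout requirement.
            attrs.append('REQ-VIDEO-LAYOUT="CH-STEREO"')
        lines.append("#EXT-X-STREAM-INF:" + ",".join(attrs))
        lines.append(variant["uri"])
    return "\n".join(lines) + "\n"


playlist = build_multivariant_playlist([
    # Placeholder values throughout; not taken from a real stream.
    {"uri": "stereo/main.m3u8", "bandwidth": 20000000,
     "codecs": "hvc1.2.4.L123.B0", "stereo": True},
    {"uri": "mono/main.m3u8", "bandwidth": 10000000,
     "codecs": "hvc1.2.4.L123.B0", "stereo": False},
])
```

A player that cannot satisfy the stereo layout requirement can then skip the first variant and fall back to the 2D one.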

Tencent MPS

Tencent MPS provides cloud transcoding and audio and video processing services for massive volumes of multimedia data. Video files in cloud storage can be transcoded on demand into formats suitable for playback on OTT, PC, or mobile devices, covering the bit rates and resolutions that different platforms require. It also provides media processing services such as watermark overlay, video screenshots, smart covers, and smart editing.

For spatial video technology based on MV-HEVC, private deployment is currently supported, and a public cloud solution will launch soon. Contact us for more information.