Live Event Broadcasting - Ultra-low Latency Live Streaming Solutions

With the spread of the Internet and improvements in network infrastructure, more and more people can easily go online and watch high-quality video, which has laid the foundation for the live streaming industry. The widespread use of smartphones and other mobile devices lets users watch live streams anytime and anywhere. Highly interactive scenarios such as online education, interactive live streaming, and sports events have raised users' expectations for smooth, low-latency playback. Traditional live streaming technologies have latencies of several seconds or more, which is unacceptable for applications that require real-time interaction. Ultra-low latency live streaming technology has emerged to meet this demand.

Current Status of Standard Live Streaming

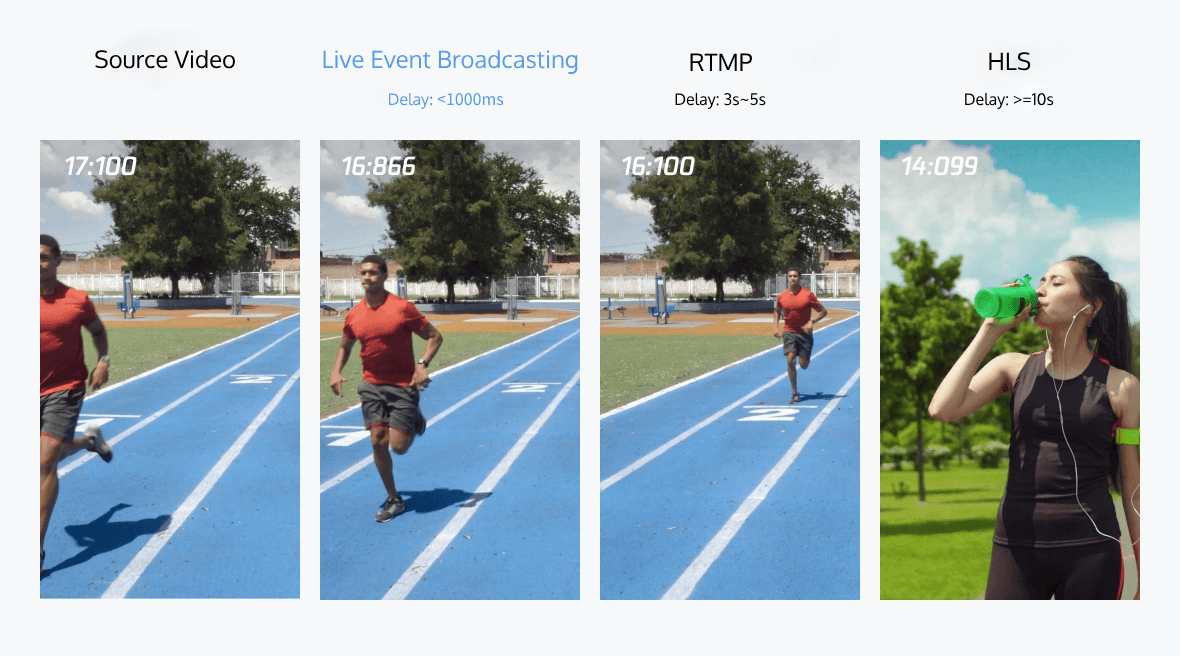

Currently, most of the live streaming industry uses standard protocols such as FLV (typically delivered as HTTP-FLV), HLS, and RTMP. FLV typically has a delay of around 2-10 seconds, driven mainly by GOP (group of pictures) size and by TCP congestion on weak networks. HLS has an even greater delay, ranging from a few seconds to several tens of seconds, driven mainly by GOP size and TS (Transport Stream) segment duration. HLS works by downloading a playlist (index) and then the segment files it lists, so the segment duration sets a floor on latency. Many players download three TS segments before starting playback, which can add tens of seconds of delay. HLS therefore has the highest delay among the standard live streaming protocols. RTMP's delay, like FLV's, depends mainly on GOP size and TCP congestion on weak networks.
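To make the segment-size effect concrete, here is a minimal Python sketch of a simplified HLS latency floor. It assumes that a segment can only start on a keyframe (so each segment lasts a whole number of GOPs) and that the player waits for three full segments before starting; it ignores encoding, network, and CDN time, so real latency will be higher. The function names and the default of three segments are illustrative assumptions, not taken from any particular player.

```python
import math


def hls_segment_duration(target_s: float, gop_s: float) -> float:
    """Actual segment length: the target rounded up to a whole number of GOPs,
    because a new segment can only start on a keyframe (simplifying assumption)."""
    return math.ceil(target_s / gop_s) * gop_s


def hls_latency_floor(target_s: float, gop_s: float, segments_before_play: int = 3) -> float:
    """Lower bound on HLS latency when the player buffers N full segments before playback."""
    return segments_before_play * hls_segment_duration(target_s, gop_s)


if __name__ == "__main__":
    # 6 s target segments with a 2 s GOP -> 6 s segments -> 18 s floor
    print(hls_latency_floor(6, 2))
    # Same target with a 4 s GOP -> 8 s segments -> 24 s floor
    print(hls_latency_floor(6, 4))
```

Shortening segments and GOPs lowers this floor, but it also increases the number of HTTP requests and reduces compression efficiency.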

All of these standard protocols run over TCP, and several TCP behaviors make it poorly suited to low-latency live streaming.

Why is TCP not suitable for low-latency live streaming?

1. Delayed acknowledgment and piggybacking: TCP does not necessarily send an ACK for every received segment. With delayed acknowledgment, the receiver waits briefly, for example until a second full-sized segment arrives or a timer expires (RFC 1122 caps this delay at 500 ms), so the ACK can cover more data or be piggybacked on outgoing data. This reduces overhead but adds latency, which matters for ultra-low latency applications whose entire delay budget is typically only a few hundred milliseconds.

2. Head-of-line blocking from reliable delivery on weak networks: TCP delivers data strictly in order, so a single lost segment holds back everything sent after it. In a live stream, the broadcaster's data accumulates in TCP's send buffer. On a poor network, once the sender has used up its window without receiving ACKs, the window cannot slide forward and new real-time data queues up behind the unacknowledged data. The resulting delay can grow to several seconds or even tens of seconds.

3. Low channel utilization due to the ACK mechanism: TCP can only keep one window's worth of unacknowledged data in flight. When ACKs are delayed or lost, the sender stalls while waiting for them, and packet loss also makes TCP's congestion control shrink the window, so the sender cannot make full use of the available bandwidth.

4. No awareness of streaming media, leading to useless retransmissions: TCP knows nothing about media timing. In live streaming, data that misses its playback deadline is worthless, yet TCP keeps retransmitting such outdated data until it is delivered, which makes congestion even worse.
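Points 2 and 4 both stem from TCP's strict in-order delivery. The following Python sketch is a toy model of head-of-line blocking, not real TCP: packets are sent every 20 ms over a path with a fixed 50 ms one-way delay, one packet is lost and its retransmission arrives one retransmission timeout (RTO) later, and the receiver releases data to the application only in order. All numbers and function names are hypothetical.

```python
from typing import List, Set


def arrival_times(send_ms: List[int], delay_ms: int, lost: Set[int], rto_ms: int) -> List[int]:
    """When each packet reaches the receiver; a lost packet's retransmission arrives one RTO late."""
    return [t + delay_ms + (rto_ms if i in lost else 0) for i, t in enumerate(send_ms)]


def in_order_release(arrivals_ms: List[int]) -> List[int]:
    """TCP-style delivery: a packet reaches the application only after every earlier packet has."""
    released, latest = [], arrivals_ms[0]
    for a in arrivals_ms:
        latest = max(latest, a)
        released.append(latest)
    return released


if __name__ == "__main__":
    send = [0, 20, 40, 60, 80]
    arrive = arrival_times(send, delay_ms=50, lost={1}, rto_ms=200)
    tcp_like = in_order_release(arrive)
    # Per-packet latency with head-of-line blocking: [50, 250, 230, 210, 190]
    print([r - s for r, s in zip(tcp_like, send)])
```

Packets 2-4 arrived after only 50 ms but waited behind packet 1. A UDP-based transport can hand them to the decoder immediately and decide separately whether packet 1 is still worth recovering or should be skipped.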

Considering the above points, it can be concluded that TCP is not suitable for low-latency live streaming.

Live Event Broadcasting solutions

1. Where does the delay come from?

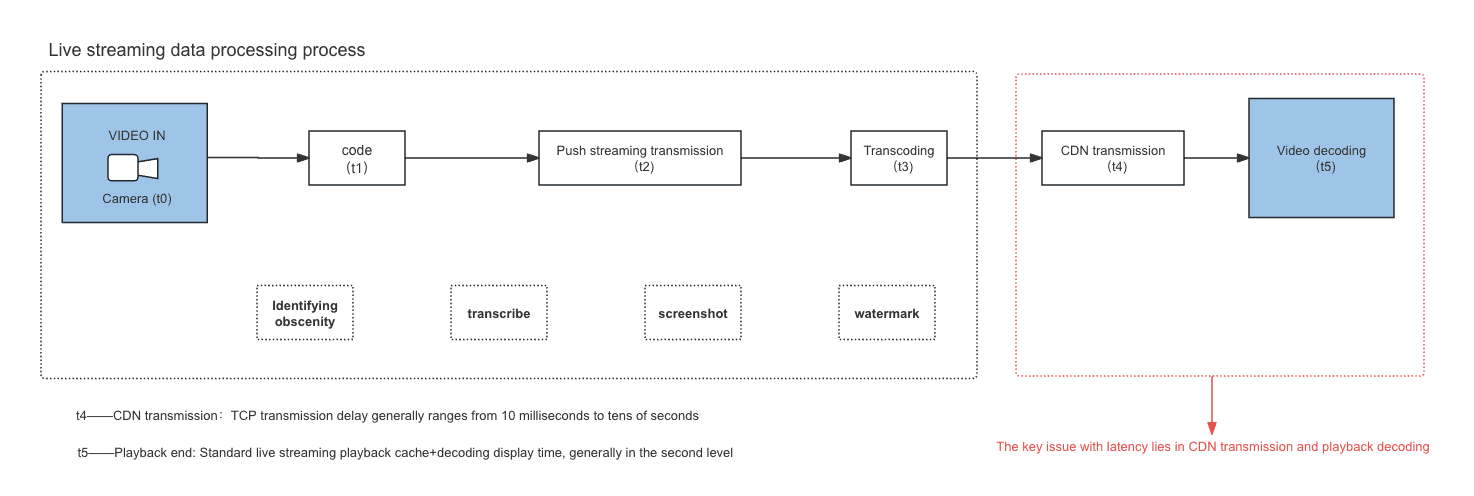

To achieve ultra-low latency, we first need to know where the latency comes from. What stages does a live stream pass through, from capture and encoding to playback?

First, the camera captures raw YUV frames, which are encoded into H.264 or H.265. Capture happens at t0, encoding at t1, ingest (push streaming) at t2, and transcoding at t3; the stream is then delivered through the CDN (t4) and finally decoded and displayed by the player (t5). Capture time is very small, usually around ten milliseconds. Encoding can be brought down to a few tens of milliseconds by tuning encoder parameters. Ingest latency depends on the push protocol and the network, and is typically on the order of tens of milliseconds. With high-speed transcoding, transcoding adds little time. The most critical stages are CDN delivery and video decoding. Viewers' networks vary widely: for users on good networks the delivery delay may be low, but for users on poor networks it can reach several seconds or even tens of seconds. TCP transmission delay is therefore an uncontrollable factor.
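The stage-by-stage reasoning above amounts to a latency budget: end-to-end delay is the sum of the per-stage delays, and the stage with the largest share is the one worth attacking. The Python sketch below uses hypothetical millisecond values loosely based on the ranges in the text and models a weak-network viewer by raising only the CDN delivery stage; the stage names and numbers are illustrative assumptions.

```python
from typing import Dict

# Hypothetical per-stage latencies in milliseconds for a viewer on a good network.
GOOD_NETWORK: Dict[str, int] = {
    "capture (t0)": 10,
    "encode (t1)": 30,
    "ingest (t2)": 50,
    "transcode (t3)": 50,
    "CDN delivery (t4)": 100,
    "decode/display (t5)": 30,
}

# Same pipeline for a viewer on a weak network: only CDN delivery changes (assumed 3 s).
WEAK_NETWORK: Dict[str, int] = {**GOOD_NETWORK, "CDN delivery (t4)": 3000}


def end_to_end_ms(stages: Dict[str, int]) -> int:
    """Total glass-to-glass latency is the sum of the stage latencies."""
    return sum(stages.values())


def dominant_stage(stages: Dict[str, int]) -> str:
    """Name of the stage contributing the most delay."""
    return max(stages, key=stages.get)


if __name__ == "__main__":
    for name, stages in (("good", GOOD_NETWORK), ("weak", WEAK_NETWORK)):
        print(name, end_to_end_ms(stages), dominant_stage(stages))
```

This sketch leaves out the player's playback buffer, which belongs to t5 and can be far larger than any of these numbers.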

In the decoding and playback phase (t5), players for standard protocols such as Flash-based RTMP playback and HLS typically buffer 6-10 seconds of content, and this player buffer is the key cause of delay. Why not simply set the buffer to 0 for live streaming? Because with no buffer, any fluctuation in network delivery immediately causes playback to stall: latency drops, but stuttering rises sharply. The key to high latency, therefore, is the poor coordination between CDN delivery and playback decoding, and that is the main problem we set out to solve.
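The buffer-versus-stall trade-off can be shown with a small Python simulation. It is a simplified model, not any specific player's logic: frames are due every 40 ms, the player waits `buffer_ms` after the first frame arrives before starting, and whenever a frame arrives after its due time the player stalls until it arrives, pushing all later frames back. The arrival times are hypothetical, with a network hiccup delaying frames 3-5.

```python
from typing import List, Tuple


def simulate_playback(arrivals_ms: List[int], frame_interval_ms: int, buffer_ms: int) -> Tuple[int, int]:
    """Return (number of stalls, total delay added by the initial buffer plus stalls)."""
    start = arrivals_ms[0] + buffer_ms  # playback begins once the initial buffer has elapsed
    shift = 0                           # extra delay accumulated by stalls so far
    stalls = 0
    for i, arrival in enumerate(arrivals_ms):
        due = start + i * frame_interval_ms + shift
        if arrival > due:
            # Frame is not available when it should be shown: stall until it arrives.
            stalls += 1
            shift += arrival - due
    return stalls, buffer_ms + shift


if __name__ == "__main__":
    # Frames sent every 40 ms over a 50 ms path; frames 3-5 are held up by a hiccup.
    arrivals = [50, 90, 130, 400, 405, 410]
    print(simulate_playback(arrivals, 40, buffer_ms=0))    # (1, 230): one stall
    print(simulate_playback(arrivals, 40, buffer_ms=300))  # (0, 300): no stall, more delay
```

With no buffer the viewer sees a visible freeze; a 300 ms buffer absorbs the hiccup but adds delay to every frame. Choosing that buffer well depends on how predictable delivery is.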

2. UDP is key to low-latency live streaming

UDP offers advantages such as lower overhead and reduced latency compared to TCP. However, it is important to note that UDP does not provide built-in reliability or congestion control mechanisms like TCP.

In low-latency live streaming scenarios, where real-time interaction is crucial, UDP can be beneficial due to its reduced latency and the ability to prioritize real-time data over reliability. It allows for faster transmission of data packets without the need for acknowledgments or retransmissions.

However, using UDP for live streaming also comes with challenges. Since UDP does not guarantee reliable delivery, it is susceptible to packet loss and out-of-order delivery. This means that additional mechanisms, such as forward error correction (FEC) or retransmission protocols, may need to be implemented to ensure data integrity.
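As an illustration of the FEC idea, here is a minimal Python sketch of the simplest scheme: one XOR parity packet per group. This is a generic technique, not necessarily what any particular product uses; it assumes equal-length packets (real schemes pad them) and can repair at most one loss per group. Stronger codes, such as Reed-Solomon, can repair multiple losses at the cost of more computation.

```python
from typing import List, Optional


def make_parity(packets: List[bytes]) -> bytes:
    """XOR all packets in a group into one parity packet (packets must be equal length)."""
    parity = bytearray(len(packets[0]))
    for packet in packets:
        for i, byte in enumerate(packet):
            parity[i] ^= byte
    return bytes(parity)


def recover(received: List[Optional[bytes]], parity: bytes) -> List[Optional[bytes]]:
    """Rebuild at most one missing packet (None) by XOR-ing the parity with the survivors."""
    missing = [i for i, p in enumerate(received) if p is None]
    if len(missing) > 1:
        raise ValueError("single XOR parity can repair only one lost packet per group")
    repaired = list(received)
    if missing:
        rebuilt = bytearray(parity)
        for packet in received:
            if packet is not None:
                for i, byte in enumerate(packet):
                    rebuilt[i] ^= byte
        repaired[missing[0]] = bytes(rebuilt)
    return repaired


if __name__ == "__main__":
    group = [b"pkt0", b"pkt1", b"pkt2"]
    parity = make_parity(group)
    print(recover([b"pkt0", None, b"pkt2"], parity))  # [b'pkt0', b'pkt1', b'pkt2']
```

The cost is one extra packet per group (33% overhead for groups of three here): FEC spends bandwidth up front instead of waiting a round trip for a retransmission.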

Furthermore, UDP-based solutions require careful management of congestion control to prevent network congestion and ensure fair bandwidth utilization. This can be achieved through various techniques, such as rate limiting, congestion control algorithms, or adaptive bitrate streaming.
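Rate limiting, the first technique mentioned, is commonly done with a token bucket. Below is a minimal Python sketch that takes explicit timestamps so it is deterministic; the class name, rate, and burst values are hypothetical. A sender would consult it before each packet and hold back (pace) packets that are not yet allowed. Note that this is only a rate limiter, not a congestion control algorithm: on its own it does not react to loss or delay.

```python
class TokenBucket:
    """Allows on average `rate` bytes per second, with bursts up to `burst` bytes."""

    def __init__(self, rate_bytes_per_s: float, burst_bytes: float) -> None:
        self.rate = rate_bytes_per_s
        self.capacity = burst_bytes
        self.tokens = burst_bytes  # start full so an initial burst can go out immediately
        self.last_s = 0.0

    def allow(self, size_bytes: int, now_s: float) -> bool:
        # Refill tokens for the time elapsed since the last call, capped at the burst size.
        self.tokens = min(self.capacity, self.tokens + (now_s - self.last_s) * self.rate)
        self.last_s = now_s
        if self.tokens >= size_bytes:
            self.tokens -= size_bytes
            return True
        return False  # caller should hold the packet and retry later


if __name__ == "__main__":
    bucket = TokenBucket(rate_bytes_per_s=1000, burst_bytes=1500)
    print(bucket.allow(1200, now_s=0.0))  # True: the bucket starts full
    print(bucket.allow(1200, now_s=0.5))  # False: only 800 bytes of tokens available
    print(bucket.allow(1200, now_s=1.0))  # True: refilled to 1300
```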

In summary, while UDP is often preferred for low-latency live streaming due to its reduced overhead and latency, it is not the only solution. The choice of protocol depends on the specific requirements of the streaming application and the trade-offs between latency, reliability, and network conditions.

Our research found several protocols used in the industry for low-latency live streaming, including SRT, WebRTC, and ORTC. Of these, SRT and ORTC are less widely adopted, while WebRTC has a thriving ecosystem. Based on this comparison, we chose WebRTC to achieve ultra-low latency in our streaming solution: it offers millisecond-level latency and provides the streaming media features that low-latency live streaming requires.

3. Technologies and strategies of Live Event Broadcasting

Live Event Broadcasting solutions typically involve the following key technologies and strategies:

- WebRTC: WebRTC is a real-time communication technology known for its low latency and efficiency. It enables direct audio and video transmission in web browsers without the need for plugins or additional software. WebRTC allows for real-time and low-latency live streaming experiences.

- UDP Transport: UDP (User Datagram Protocol) has less overhead than TCP and avoids head-of-line blocking, because a lost packet does not hold up the packets behind it. Using UDP for transmission reduces latency during streaming and improves real-time performance.

- Forward Error Correction (FEC): FEC is a redundancy technique that adds extra information during transmission so that lost packets can be recovered without waiting for a retransmission. FEC reduces the impact of packet loss on streaming quality and latency.

- Adaptive Bitrate Streaming (ABR): ABR adjusts the video bitrate and quality dynamically based on network conditions. By monitoring the available bandwidth and latency in real-time, ABR optimizes the video bitrate to ensure a smooth streaming experience.

- CDN Optimization: Content Delivery Network (CDN) optimization involves caching live streaming content on servers closer to the end-users, reducing transmission latency. Choosing an efficient CDN provider and strategically deploying CDN nodes can improve streaming speed and quality.

- Fast Startup and Player Optimization: Shortening stream startup time and speeding up player loading are crucial to a good Live Event Broadcasting experience. Together they minimize user waiting time and improve real-time streaming performance.
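The ABR bullet can be illustrated with a simple throughput-based rule: smooth recent throughput measurements, keep a safety margin, and pick the highest rendition that fits. This Python sketch is one common heuristic, not a description of how Live Event Broadcasting itself decides; the bitrate ladder, smoothing factor, and 0.8 safety margin are hypothetical.

```python
from typing import List

# Hypothetical bitrate ladder of transcoded renditions, in kbps.
LADDER_KBPS: List[int] = [500, 1200, 2500, 4500]


def ewma(samples: List[float], alpha: float = 0.3) -> float:
    """Exponentially weighted moving average: recent samples count more."""
    estimate = samples[0]
    for s in samples[1:]:
        estimate = alpha * s + (1 - alpha) * estimate
    return estimate


def choose_bitrate(throughput_kbps: List[float], ladder: List[int] = LADDER_KBPS, safety: float = 0.8) -> int:
    """Highest rendition at or below a safety fraction of the smoothed throughput."""
    budget = safety * ewma(throughput_kbps)
    fitting = [b for b in ladder if b <= budget]
    return max(fitting) if fitting else min(ladder)  # fall back to the lowest rendition


if __name__ == "__main__":
    print(choose_bitrate([6000, 6000, 6000]))  # 4500
    print(choose_bitrate([6000, 3000, 2000]))  # 2500: throughput is dropping, step down
    print(choose_bitrate([400]))               # 500: nothing fits, use the lowest
```

Smoothing trades reaction speed for stability: a larger `alpha` reacts faster to bandwidth drops but switches renditions more often.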

By combining these technologies and strategies, it is possible to achieve Live Event Broadcasting solutions that offer low latency and high-quality real-time experiences.

4. Upgrades to Standard WebRTC

In addition, Live Event Broadcasting (LEB) makes several upgrades to standard WebRTC. Standard WebRTC has certain limitations: it supports only Opus for audio; it does not support H.265 for video (because of patent issues and the competition between VP9 and H.265, with Google favoring VP9); it does not support B-frames; its signaling exchange is slow; and it cannot pass through metadata. Some of these limitations are not essential for online education and live-commerce streaming, so they can be set aside in those scenarios. However, some customers need metadata for synchronization, which standard WebRTC does not support.

We have upgraded standard WebRTC in five areas: support for AAC audio (with both ADTS and LATM encapsulation); support for H.265 and B-frames in video; streamlined signaling through SDP negotiation; the ability to disable GTRS (Google Transport Relay Service); and metadata passthrough. Live Event Broadcasting supports H.264, H.265, various audio formats, and encryption settings.
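In WebRTC, codecs such as H.265 are negotiated through the SDP offer/answer exchange, where each RTP payload type is mapped to a codec by an `a=rtpmap` line. The Python sketch below lists the codecs an SDP blob offers and checks for H.265 (RFC 7798 registers it under the name `H265`). It is generic SDP parsing for illustration only; the sample SDP is hypothetical and heavily trimmed, and the sketch says nothing about how Live Event Broadcasting implements its signaling.

```python
import re
from typing import Dict

# a=rtpmap:<payload type> <encoding name>/<clock rate>[/<channels>]
RTPMAP = re.compile(r"^a=rtpmap:(\d+) ([\w.-]+)/(\d+)")


def offered_codecs(sdp: str) -> Dict[int, str]:
    """Map payload type -> 'NAME/clock' for every rtpmap line in the SDP."""
    codecs: Dict[int, str] = {}
    for line in sdp.splitlines():
        match = RTPMAP.match(line.strip())
        if match:
            codecs[int(match.group(1))] = f"{match.group(2)}/{match.group(3)}"
    return codecs


def offers_codec(sdp: str, name: str) -> bool:
    """Compare encoding names case-insensitively, as media subtype names are case-insensitive."""
    return any(c.split("/")[0].lower() == name.lower() for c in offered_codecs(sdp).values())


SAMPLE_SDP = """v=0
m=video 9 UDP/TLS/RTP/SAVPF 96 98
a=rtpmap:96 H264/90000
a=rtpmap:98 H265/90000
m=audio 9 UDP/TLS/RTP/SAVPF 111
a=rtpmap:111 opus/48000/2
"""

if __name__ == "__main__":
    print(offered_codecs(SAMPLE_SDP))  # {96: 'H264/90000', 98: 'H265/90000', 111: 'opus/48000'}
    print(offers_codec(SAMPLE_SDP, "h265"))  # True
```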

The advantages of Live Event Broadcasting

Ultra-low latency playback in milliseconds

Live Event Broadcasting uses UDP between nodes to achieve millisecond-level latency even in high-concurrency scenarios, overcoming the 3-5 second delay of traditional live streaming. It delivers excellent core metrics such as instant playback start and a low rebuffering rate, giving users a truly ultra-low latency live streaming experience.

Wide coverage of acceleration nodes with high bandwidth capacity

Live Event Broadcasting currently has an extensive network of acceleration nodes covering locations worldwide (2,000+ nodes in 25 countries) and can support more than 100 Tbps of bandwidth.

Easy to use

Live Event Broadcasting adopts standard protocols, making integration simple. It allows playback in Chrome and Safari browsers without the need for any plugins.

Excellent network resilience

Live Event Broadcasting ensures high-quality video streaming even in weak network conditions such as high packet loss and latency. It provides users with a more stable live streaming experience.

Seamless multi-bitrate switching

Smooth transition between different transcoded streams without interruptions or abrupt changes, ensuring a seamless visual and auditory experience.

Adaptive bitrate control

Automatically adjusts the bitrate based on network bandwidth, ensuring smooth playback in varying network conditions.

Application scenarios for Live Event Broadcasting

Sports Events

LEB offers live video streaming with ultra-low latency for sports events. The viewers can enjoy watching sports events and get the event results in real time.

Live Shopping

LEB's ultra-low latency meets the requirements of live shopping scenarios such as auctions and sales promotions. It gives the host and viewers timely transaction feedback and lets viewers easily buy products while watching.

Online Education

LEB can be used to build online classrooms with ultra-low latency. Both teachers and students can broadcast live video in real time, making online classes feel like face-to-face learning.

Live Q&A

Because traditional live Q&A has high latency, frames must be inserted on the viewer clients so that the host and viewers see the same screen at the same time. LEB's ultra-low latency solves this problem: both sides see the same screen in real time, enabling smooth live Q&A.

Interactions in Live Shows

LEB is well suited to live shows, providing a responsive experience for gift-giving and other interactive features that require low latency.

Conclusion

In this article, we have discussed the ultra-low latency live streaming solutions. By utilizing WebRTC, we can achieve nearly real-time live streaming experiences. This solution is crucial for applications that require real-time interaction and instant feedback, such as online gaming, sports events, and online education.

With the use of Live Event Broadcasting, we can provide a better user experience by reducing latency and buffering, allowing viewers to interact with content more quickly. Additionally, this solution offers higher reliability and stability, ensuring the continuity and quality of live streaming content.

However, Live Event Broadcasting also faces challenges such as network stability, bandwidth requirements, and device compatibility. To fully leverage the advantages of this solution, we need to consider these factors comprehensively and take appropriate measures to optimize and improve.

In conclusion, Live Event Broadcasting provides essential technical support for real-time interaction and instant feedback applications. With ongoing technological advancements and improvements, we can expect better user experiences and broader application scenarios.

At present, we have a demo available for Live Event Broadcasting. We cordially invite everyone to explore this experience and feel free to contact us for more information.