Audio/Video Enhancement Integration

Last updated: 2025-06-19 16:33:12

I. Audio/Video Enhancement Feature Overview

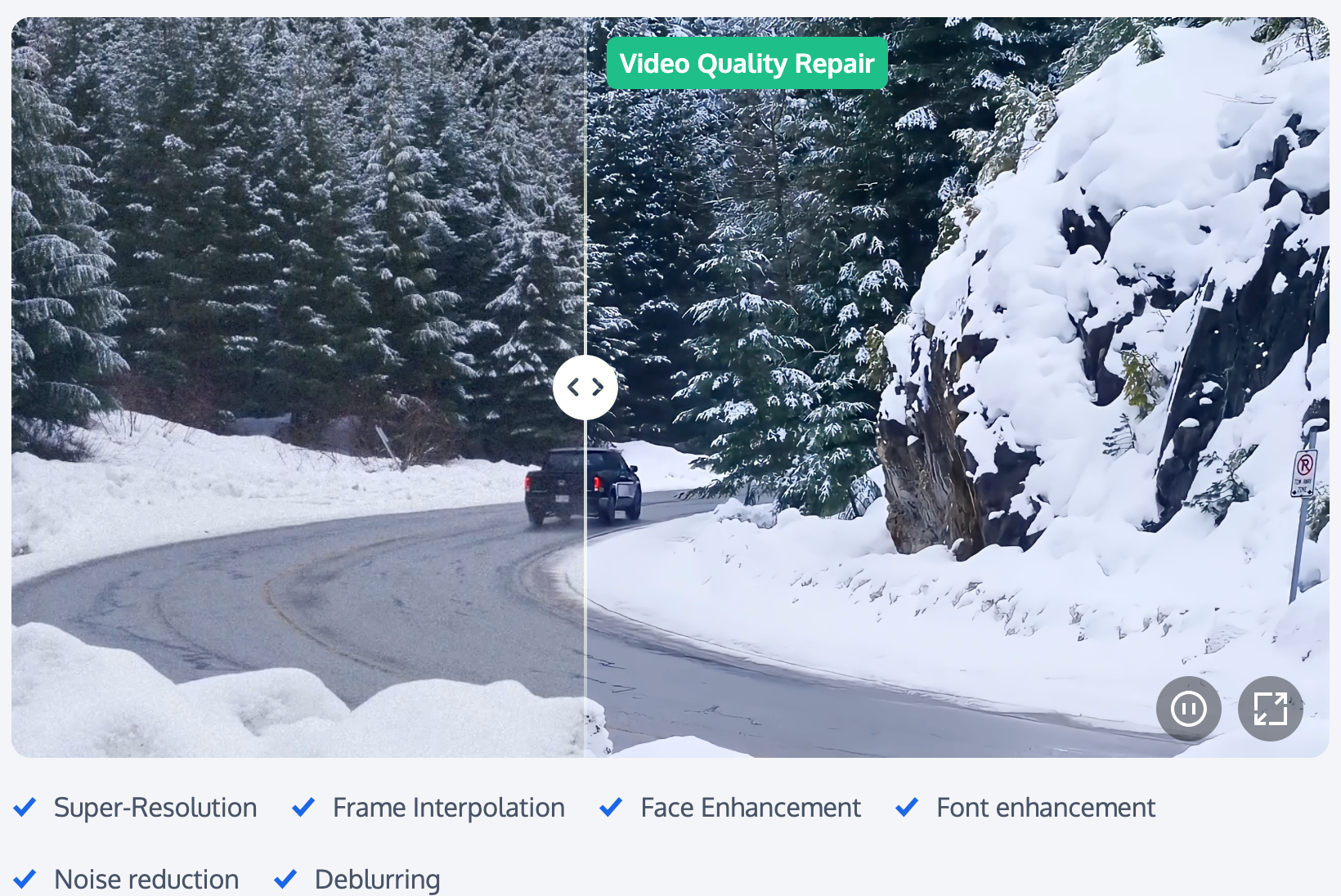

Overview

The audio/video enhancement feature builds on the audio/video AI processing models of MPS and its accumulated business data to provide professional-level audio/video enhancement solutions. It supports distributed real-time image quality enhancement, including video artifacts removal, denoising, color enhancement, detail enhancement, face enhancement, SDR2HDR, and large model enhancement, which can significantly improve audio and video quality. It is widely used in OTT, e-commerce, sports events, and other scenarios, where it improves both QoE and QoS.

Technical Strengths

Full-scenario AI enhancement algorithm: It is an industry-leading AI enhancement algorithm customized for different scenarios such as gaming, UGC content, PGC high-definition films and TV shows, online education, live shows, e-commerce, and old film sources, comprehensively improving audio and video quality.

Comprehensive audio enhancement: It supports voice denoising, audio separation, audio quality enhancement, and volume equalization, significantly improving audio clarity and quality, meeting the demand for high-quality audio in various scenarios.

How to Use the Audio/Video Enhancement Feature

(1) Precautions Before Use

Before using the audio/video enhancement feature, you need to complete the following preliminary operations: registration/login for a Tencent Cloud account; activation and authorization of the COS service. For a specific operation guide, see Getting Started. For account authorization issues, see the Account Authorization documentation.

(2) Creating Audio/Video Enhancement Tasks

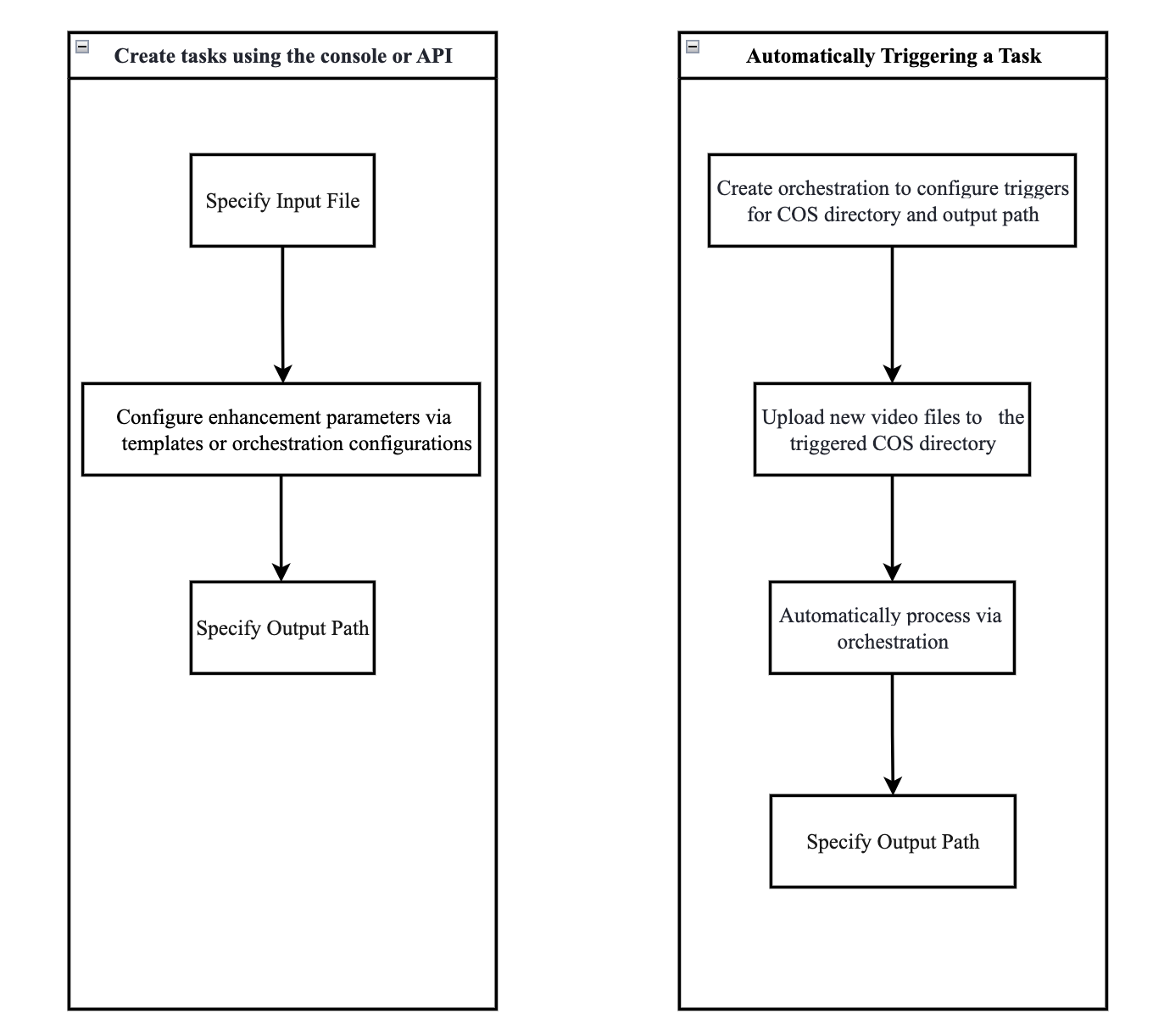

Tencent Cloud Media Processing Service (MPS) provides three task initiation methods: quickly creating tasks through the console, initiating tasks through the API, and automatically triggering tasks. The following flowchart shows the general operation process for each task initiation method. To learn about the specific configuration methods for audio/video enhancement tasks, see the detailed instructions in the Creating Audio/Video Enhancement Tasks section below.

II. Creating Audio/Video Enhancement Tasks

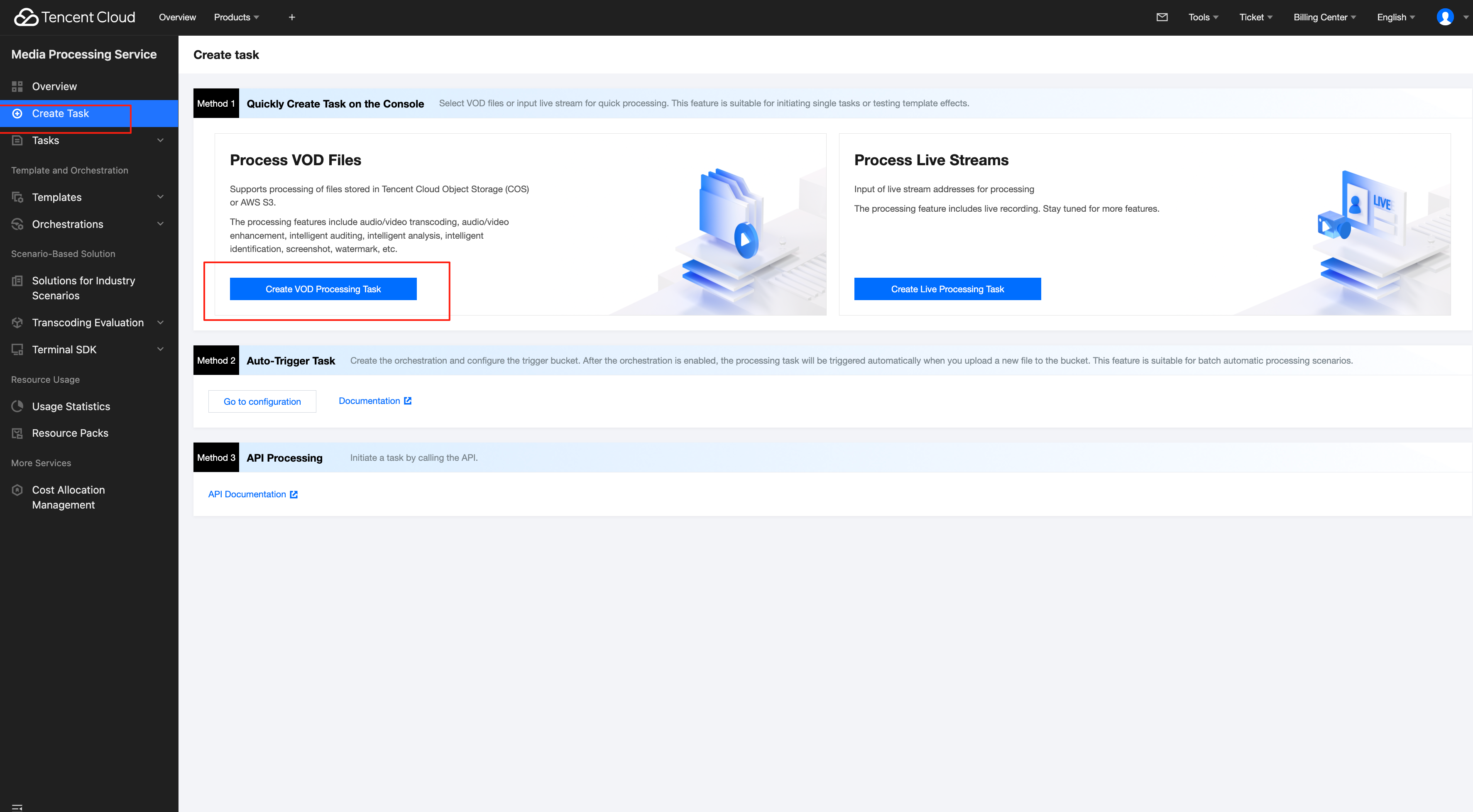

Method 1. Quickly Creating Tasks Through the Console

1. Go to the Media Processing Service console, and then click Create Task > Create VOD Processing Task.

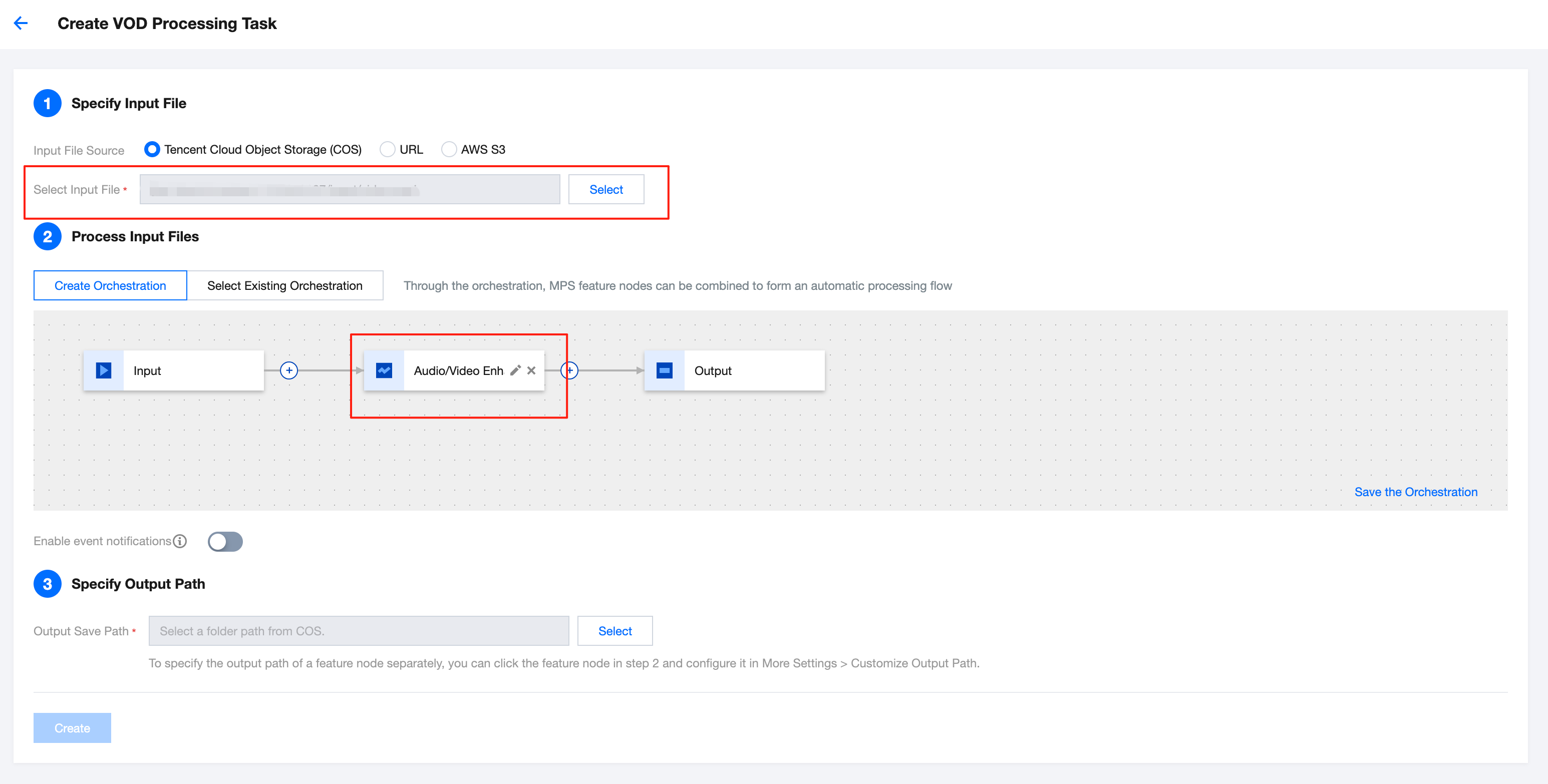

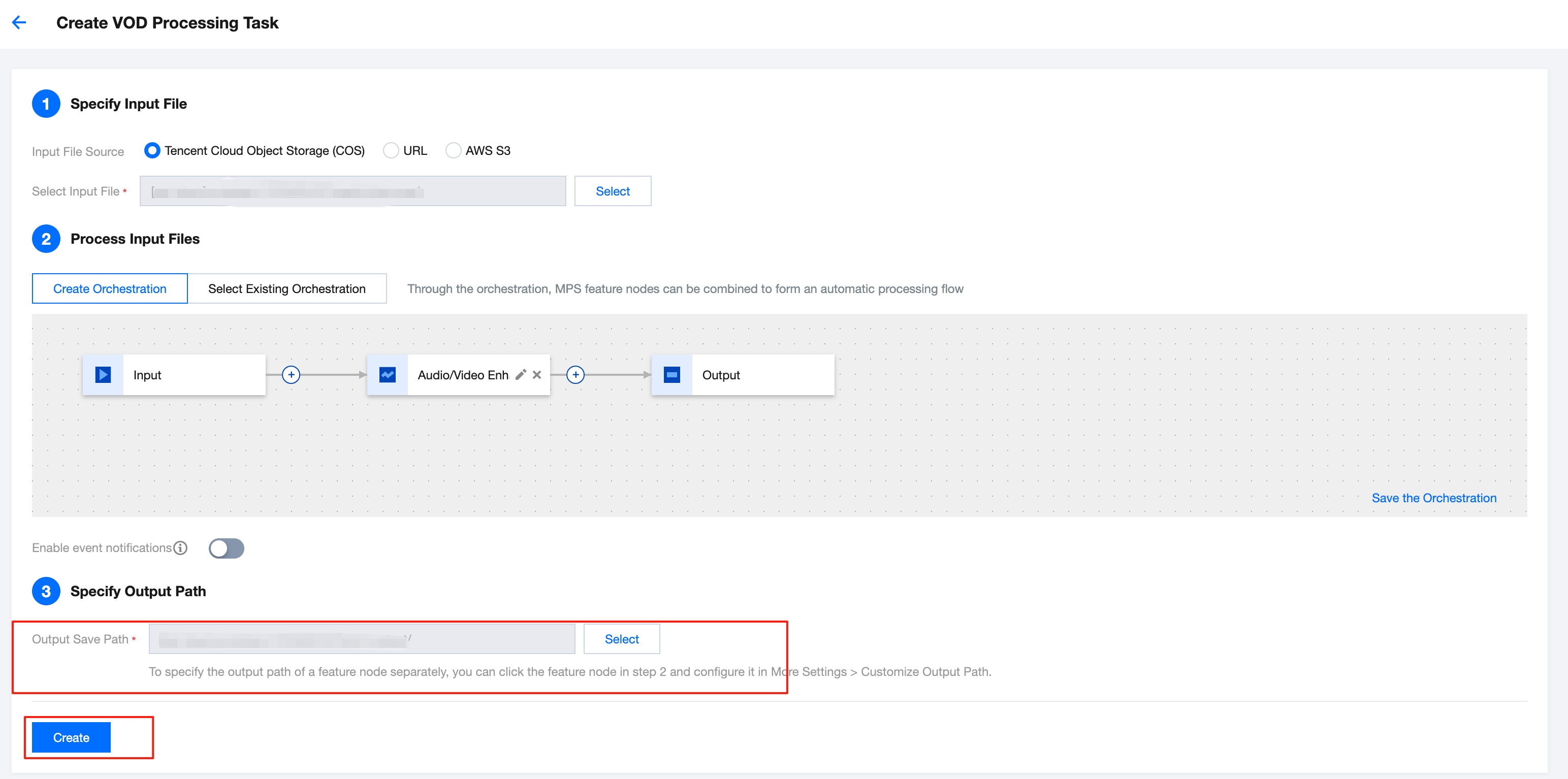

2. First, specify the input video file. You can select audio/video files from a COS or AWS S3 bucket, or provide a file download URL.

3. Then, in the step Select Input File, add an Audio/Video Enhancement node.

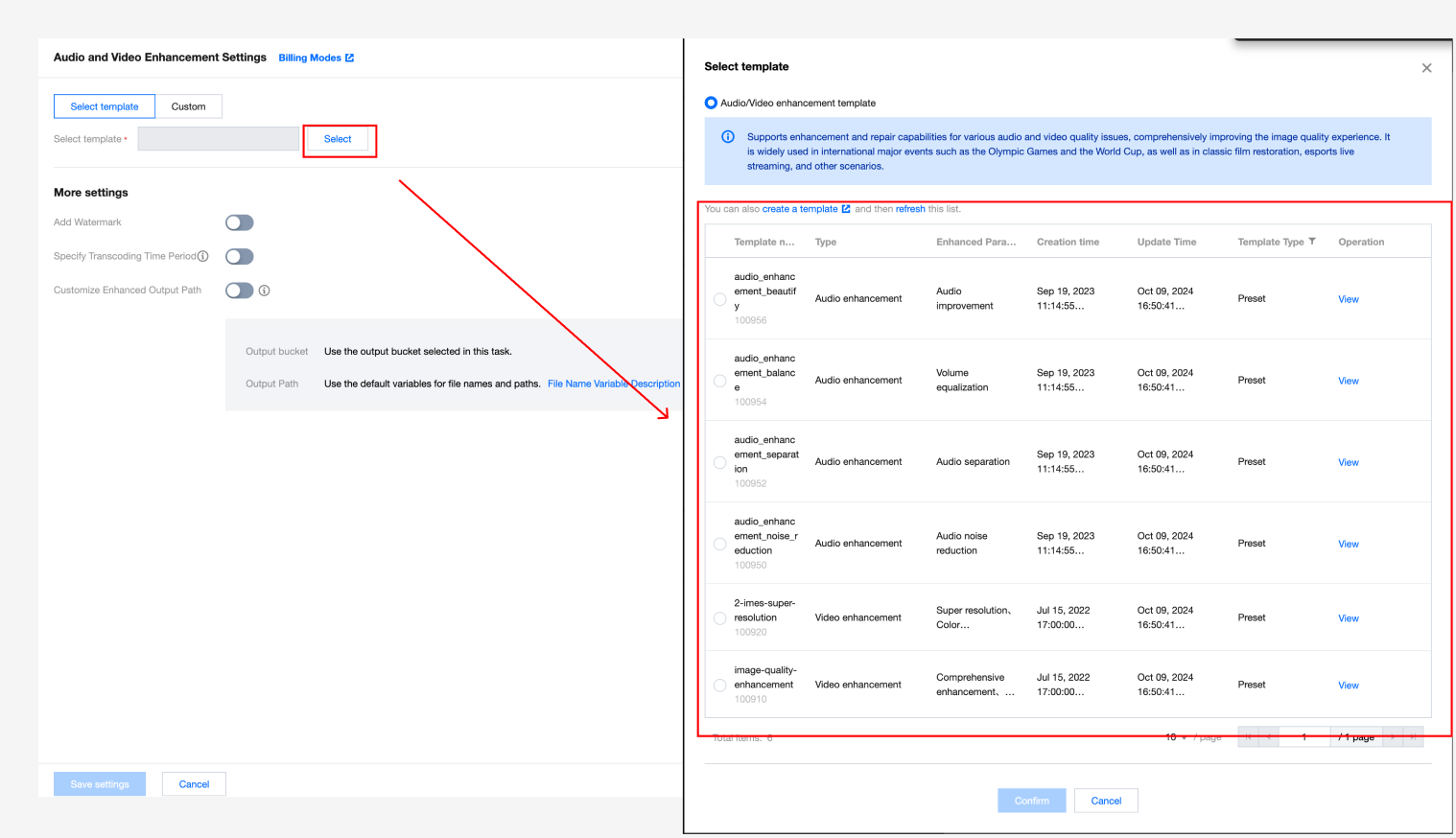

4. In the Audio/Video Enhancement Settings pop-up window, select the required audio/video enhancement template.

Note:

The audio/video enhancement template in the console does not yet support detailed transcoding parameter configurations such as transcoding type, bitrate, and GOP (by default, standard transcoding is used, and parameters such as the bitrate and GOP are set to default values, which are usually source-following or automatic). Therefore, if you need to adjust transcoding-related parameters, it is recommended to use the API-based method to add an enhancement template. Click View Guide for details.

5. Finally, after specifying the output video storage path, click Create to initiate the task.

Method 2. Initiating a Task via the API

Method (1): Call the ProcessMedia API and initiate a task by specifying the Template ID. Example:

{
  "InputInfo": {
    "Type": "URL",
    "UrlInputInfo": {
      "Url": "https://test-1234567.cos.ap-guangzhou.myqcloud.com/video/test.mp4" // Replace it with the video URL to be processed.
    }
  },
  "OutputStorage": {
    "Type": "cos",
    "CosOutputStorage": {
      "Bucket": "test-1234567",
      "Region": "ap-guangzhou"
    }
  },
  "MediaProcessTask": {
    "TranscodeTaskSet": [
      {
        "Definition": 100910 // 100910 is the preset template ID for video enhancement - comprehensive enhancement, color enhancement, and artifacts removal. It can be replaced with your custom audio/video enhancement template ID.
      }
    ]
  }
}

Method (2): Call the ProcessMedia API and initiate a task by specifying the Service Orchestration ID. Example:

{
  "InputInfo": {
    "Type": "URL",
    "UrlInputInfo": {
      "Url": "https://test-1234567.cos.ap-guangzhou.myqcloud.com/video/test.mp4" // Replace it with the video URL to be processed.
    }
  },
  "OutputStorage": {
    "Type": "cos",
    "CosOutputStorage": {
      "Bucket": "test-1234567",
      "Region": "ap-guangzhou"
    }
  },
  "OutputDir": "/output/",
  "ScheduleId": 12345 // Replace it with a custom orchestration ID. 12345 is a sample value and has no practical significance.
}
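The two request bodies above differ only in how the processing is selected: a template ID under MediaProcessTask, or an orchestration ID in ScheduleId. The following is a minimal Python sketch that assembles either body from the same inputs. The function name `build_process_media_request` and its parameters are hypothetical helpers, not part of any SDK, and the field values simply mirror the examples in this document. Sending the request (signing, endpoint, credentials) is not shown and would normally go through an official SDK or the API Explorer.

```python
import json
from typing import Optional


def build_process_media_request(
    video_url: str,
    bucket: str,
    region: str,
    definition: Optional[int] = None,
    schedule_id: Optional[int] = None,
    output_dir: Optional[str] = None,
) -> dict:
    """Build a ProcessMedia request body (hypothetical helper).

    Pass `definition` to start a task by template ID, or `schedule_id`
    to start it by orchestration ID. Exactly one of the two is required.
    """
    if (definition is None) == (schedule_id is None):
        raise ValueError("pass exactly one of definition or schedule_id")

    request = {
        "InputInfo": {
            "Type": "URL",
            "UrlInputInfo": {"Url": video_url},
        },
        "OutputStorage": {
            # "cos" mirrors the value used in this document's examples.
            "Type": "cos",
            "CosOutputStorage": {"Bucket": bucket, "Region": region},
        },
    }
    if output_dir is not None:
        request["OutputDir"] = output_dir
    if definition is not None:
        # Method (1): template ID inside a transcode task.
        request["MediaProcessTask"] = {
            "TranscodeTaskSet": [{"Definition": definition}]
        }
    else:
        # Method (2): orchestration ID.
        request["ScheduleId"] = schedule_id
    return request


SAMPLE_URL = "https://test-1234567.cos.ap-guangzhou.myqcloud.com/video/test.mp4"

by_template = build_process_media_request(
    SAMPLE_URL, "test-1234567", "ap-guangzhou", definition=100910
)
by_schedule = build_process_media_request(
    SAMPLE_URL, "test-1234567", "ap-guangzhou",
    schedule_id=12345, output_dir="/output/",
)
print(json.dumps(by_template, indent=2))
print(json.dumps(by_schedule, indent=2))
```

Because the helper returns a plain dictionary, the output is comment-free JSON, unlike the annotated examples above, and can be pasted into the API Explorer as is.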

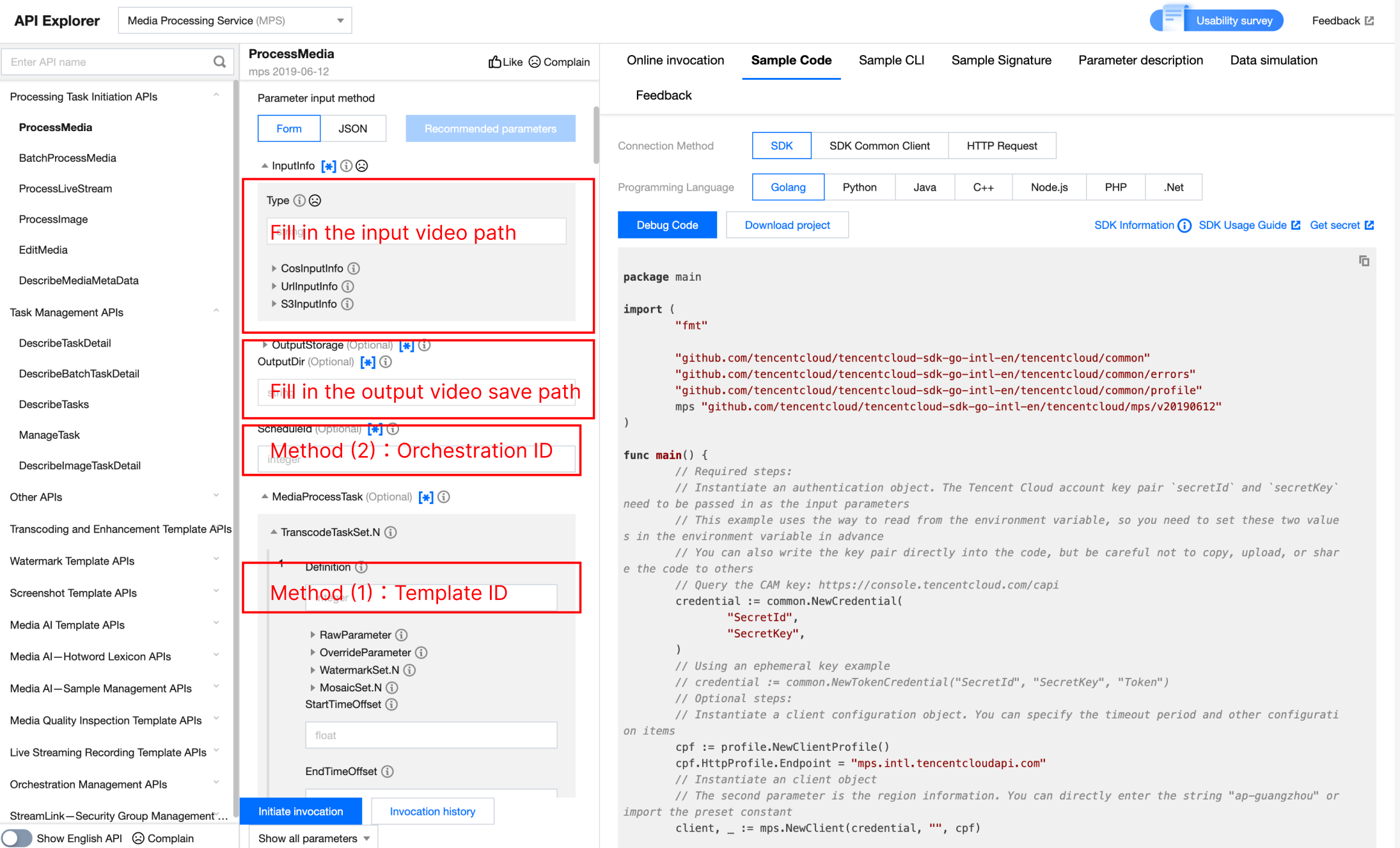

API Explorer quick verification

1.1 First, go to the Media Processing Service console to activate the service and confirm that COS authorization has been completed.

1.2 Then, enter the online debugging page of the MPS API Explorer, and select the ProcessMedia API from the API list on the left. Fill in parameters such as the input path, output path, template ID, and orchestration ID as shown in the figure, and then initiate the online API call.

Method 3. Automatically Triggering an MPS Task After a File Is Uploaded to COS

If you want video files uploaded to a COS bucket to be enhanced automatically according to preset parameters, you can:

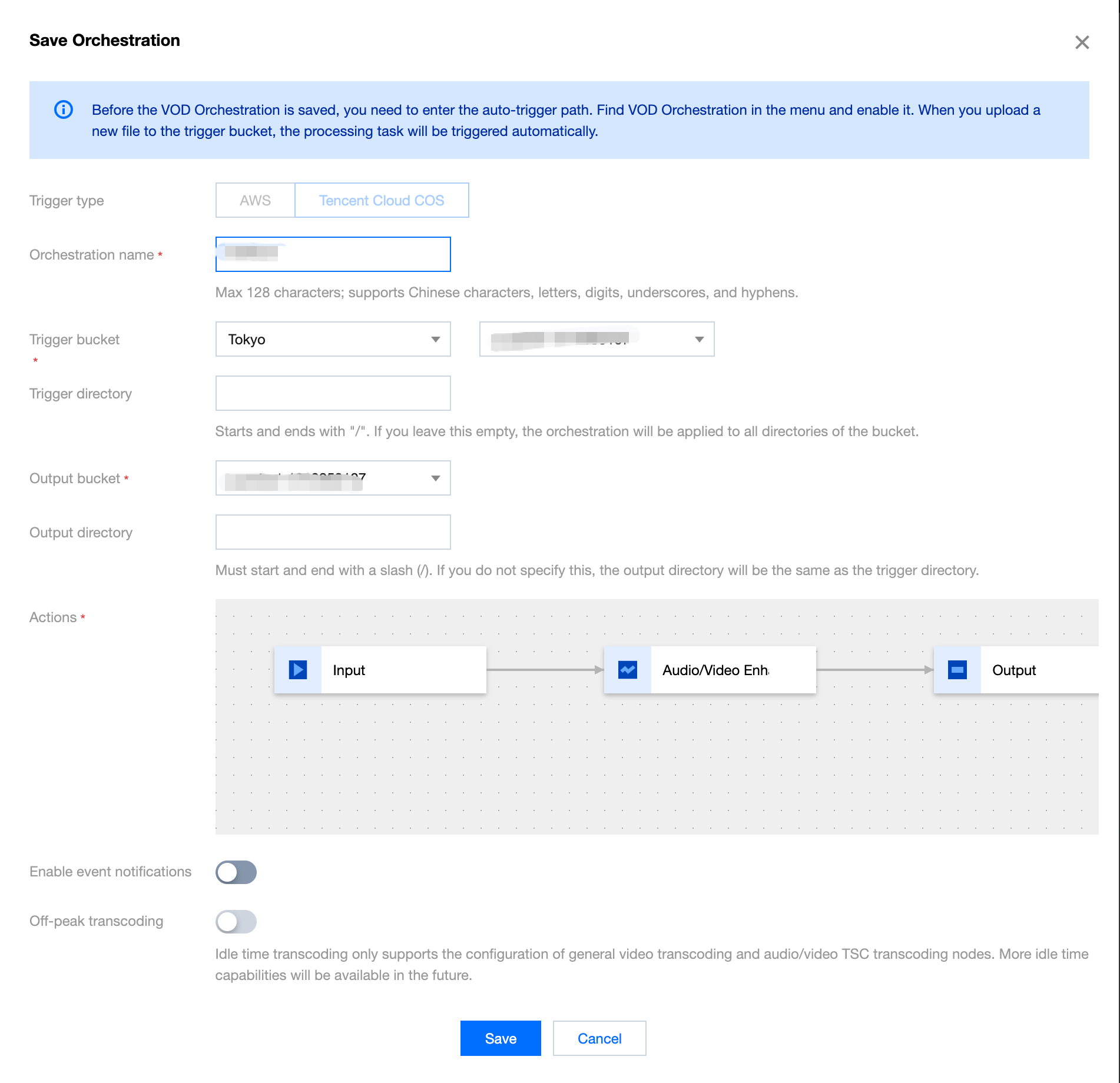

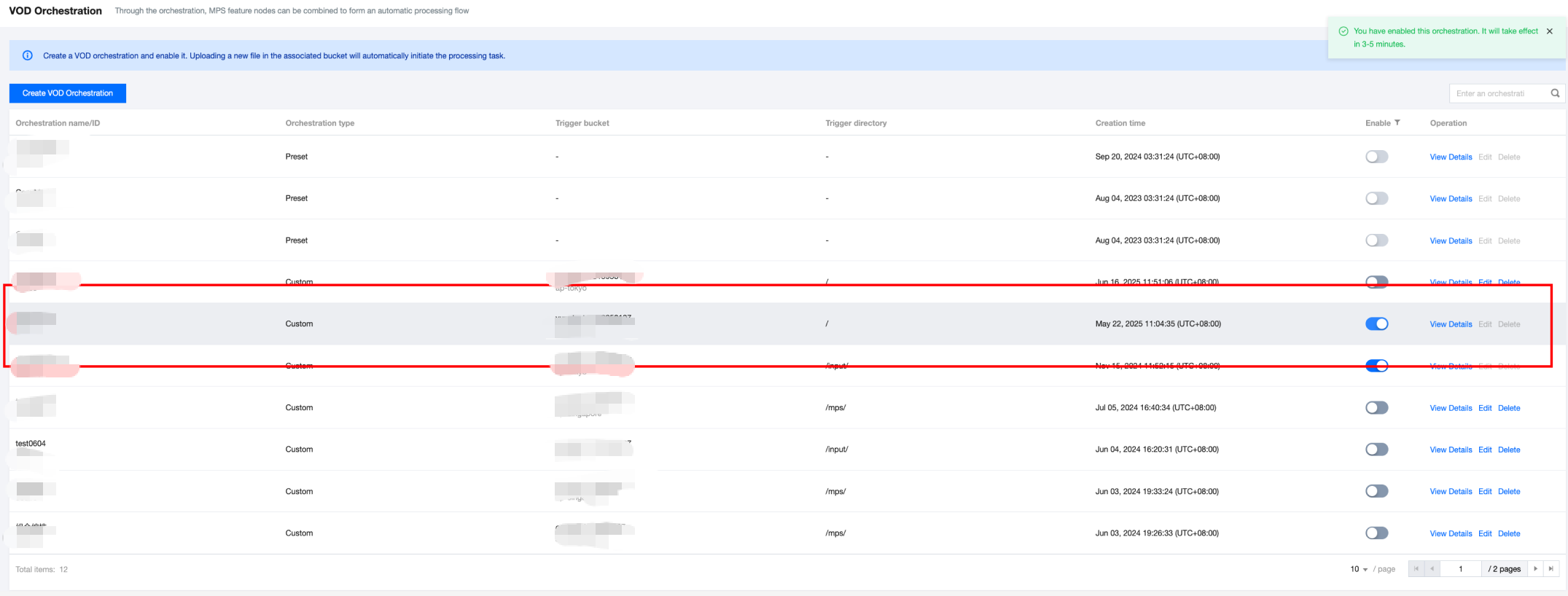

1. Click Save the Orchestration when creating a task, and configure parameters such as the triggered bucket and triggered directory in the pop-up window.

2. Then, go to the VOD Orchestration list, find the new orchestration, and enable the switch at Startup. Subsequently, any video files added to the triggered directory will automatically initiate tasks according to the preset process and parameters of the orchestration, and the processed video files will be saved to the output path configured in the orchestration.

Note:

It takes 3-5 minutes for the orchestration to take effect after being enabled.

III. Querying Task Results

1. Task Callback

When initiating an MPS task using ProcessMedia, you can set callback information through the TaskNotifyConfig parameter. After the task is processed, the task result is sent back through the configured callback information. You can parse the event notification result through ParseNotification.

2. Querying Task Results

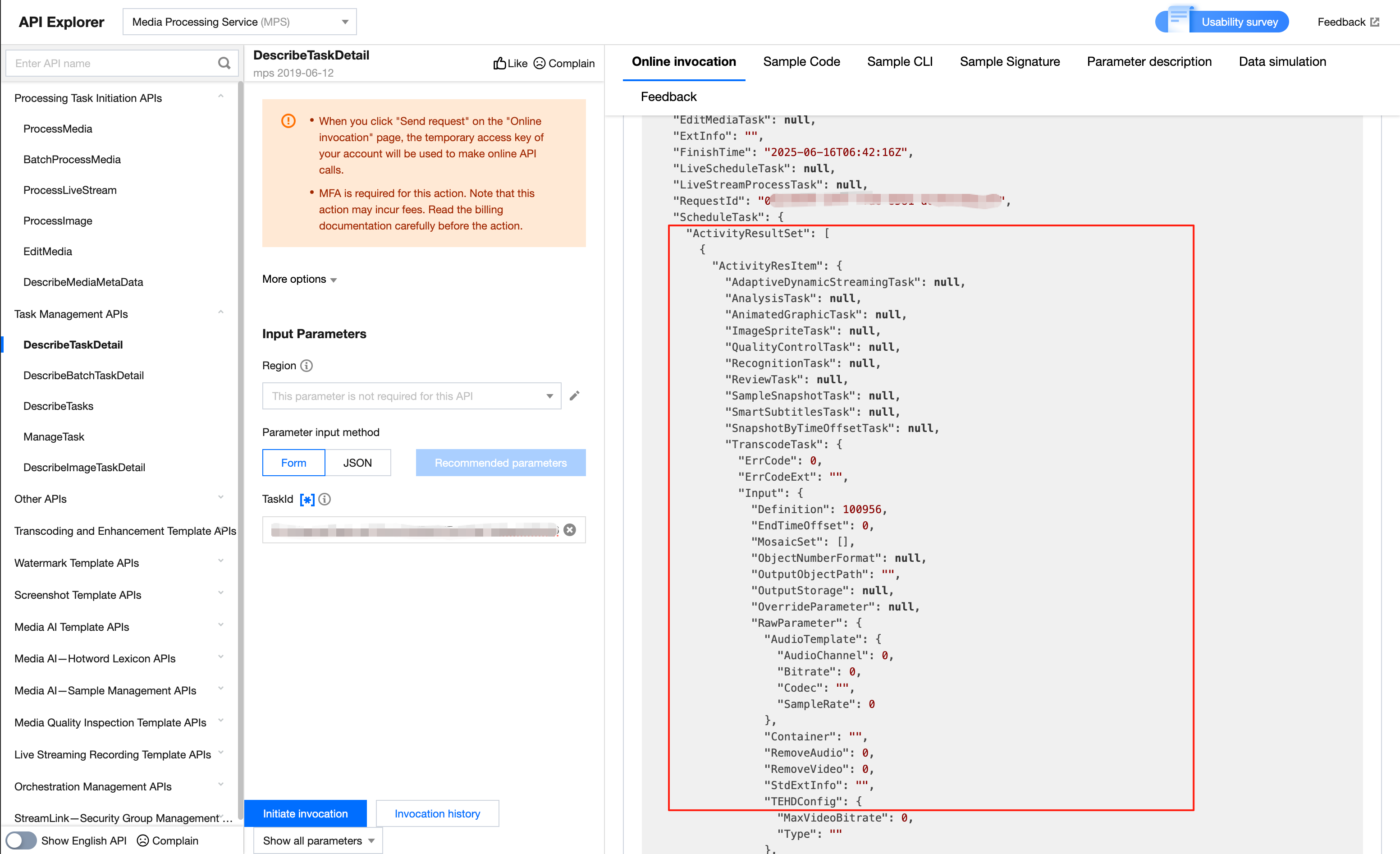

(1) Querying Task Results by Calling the DescribeTaskDetail API

Call the DescribeTaskDetail API and enter the task ID (for example, 24000022-ScheduleTask-774f101xxxxxxx1tt110253) to query the task result.
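When no callback is configured, a common pattern is to poll DescribeTaskDetail until the task completes. The following is a minimal Python sketch of such a loop. It assumes the response is a dictionary with a `Status` field whose terminal value is `"FINISH"` (with `"WAITING"` and `"PROCESSING"` before it); verify these values against the DescribeTaskDetail API reference. `wait_for_task` and `fetch_detail` are hypothetical names: `fetch_detail` stands in for whatever code actually calls the API, and is replaced by a fake here so the sketch runs offline.

```python
import time
from typing import Callable


def wait_for_task(
    fetch_detail: Callable[[str], dict],
    task_id: str,
    interval_seconds: float = 5.0,
    max_attempts: int = 60,
    sleep: Callable[[float], None] = time.sleep,
) -> dict:
    """Poll until the task reports the assumed terminal status "FINISH".

    Raises TimeoutError if the task is still unfinished after max_attempts.
    """
    for attempt in range(max_attempts):
        detail = fetch_detail(task_id)
        if detail.get("Status") == "FINISH":
            return detail
        # Do not sleep after the final attempt.
        if attempt < max_attempts - 1:
            sleep(interval_seconds)
    raise TimeoutError(f"task {task_id} not finished after {max_attempts} polls")


# Offline demonstration with canned responses instead of a real API call.
_fake_responses = iter([
    {"Status": "WAITING"},
    {"Status": "PROCESSING"},
    {"Status": "FINISH", "TaskType": "ScheduleTask"},
])


def fake_fetch(task_id: str) -> dict:
    return next(_fake_responses)


result = wait_for_task(
    fake_fetch,
    "24000022-ScheduleTask-774f101xxxxxxx1tt110253",
    sleep=lambda seconds: None,  # skip real waiting in the demo
)
print(result["Status"])
```

A finished task is not necessarily a successful one, so the returned detail should still be inspected for per-subtask status and error information.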

(2) Querying Task Results in the Console

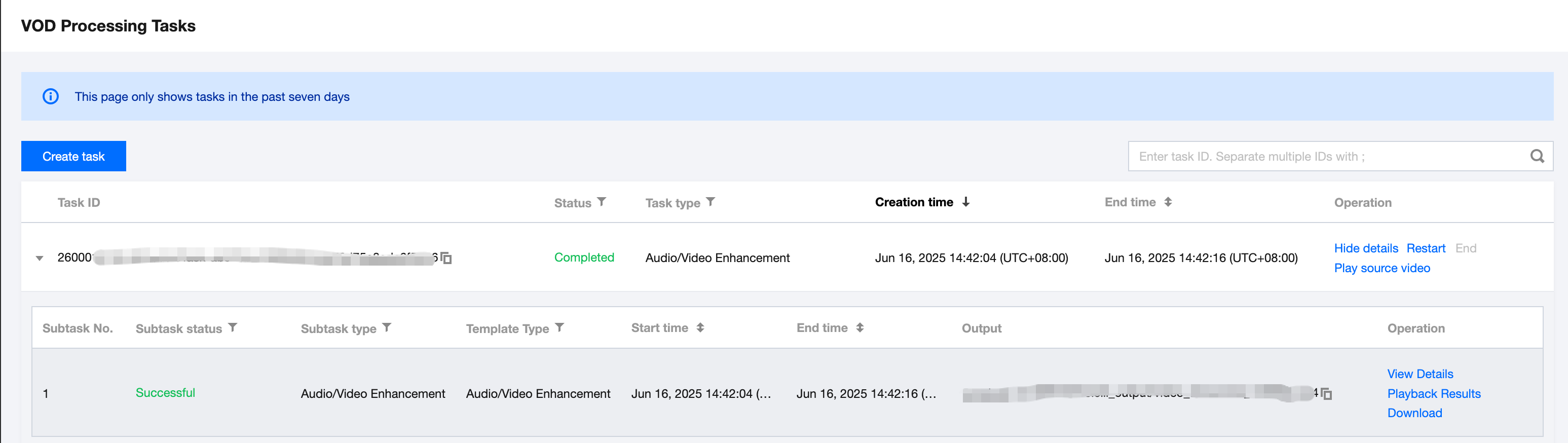

1. Go to the VOD Processing Tasks console, and the task you just initiated will be listed in the task list.

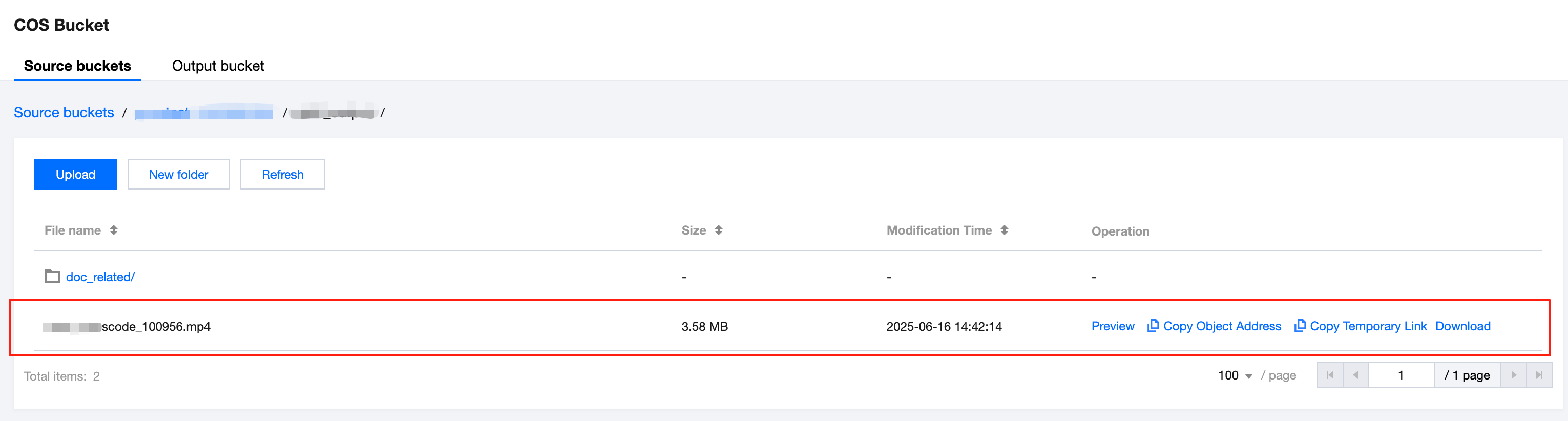

2. When the subtask is in the "Successful" status, you can go to COS Bucket > Output Bucket, find your output location, and then preview or download the audio/video enhancement output files.

IV. Additional Parameters Related to Audio/Video Enhancement

Some of the latest models are not yet available for self-service configuration. If you need to use them, contact us for backend configuration. The following features are included:

| Feature | Description |
| --- | --- |
| Comprehensive enhancement (optimized version) | The comprehensive enhancement technology analyzes and optimizes the content in videos through AI algorithms, with a particular focus on improving the clarity, details, and color performance of faces. It provides higher display quality and refined details from beginning to end, making facial features clearer and more detailed. |
| Color enhancement (optimized version) | The color enhancement feature aims to improve the color performance of videos, making the visuals closer to real-life colors while moderately enhancing them to align with human visual preferences. By adjusting color saturation, contrast, and brightness, it corrects color distortions caused by capturing devices or storage issues, thereby improving the overall visual quality of videos. This feature can significantly boost color fidelity, rendering the footage more vibrant and visually appealing. |
| Artifacts removal (optimized version) | The artifacts removal feature is primarily used to repair distortions introduced during video transcoding or multiple rounds of transcoding, such as the block effect and ringing effect. These distortions typically manifest as jagged edges, blurriness, or unnatural colors in the image, severely impacting the visual effect. The artifacts removal technology removes these artifacts intelligently by analyzing the video's encoding information, thereby restoring the clarity and naturalness of the image. The artifacts removal feature in audio/video enhancement services can effectively repair distortions introduced by encoding, improving the overall quality of the video. |
| Diffusion Transformer Video Enhancement | The large model is an augmented model based on deep learning CNN, which can cover most videos for business scenarios, especially delivering excellent results in repairing severely degraded old videos. Using the diffusion large model for restoration allows training data to better align with scenarios of vintage footage, avoiding poor detail restoration due to a model being too small and difficult to train. Meanwhile, building the enhancement model on top of existing powerful text-to-image and text-to-video foundation models enables full utilization of their various prior information of semantics and details for video quality restoration, achieving far superior results compared to conventional methods. |

V. FAQs

How to Create an Audio/Video Enhancement Template

The system provides several preset enhancement templates for your selection. You can also create custom audio/video enhancement templates according to business needs, presetting different processing parameters for various application scenarios to facilitate subsequent reuse. You can create audio/video enhancement templates through the console and API. If you need to configure transcoding-related parameters, it is recommended to use the API to add an enhancement template. Click View Guide for details.

Does the Enhancement Feature Support Configuring Encoding-Related Parameters Such As Bitrate and GOP?

The enhancement feature supports configuring encoding-related parameters. Currently, when you create an enhancement template through the console, you can only modify the encoding standard, resolution, and frame rate. If you need to set more transcoding-related parameters, such as bitrate and GOP, you should use the API to create an enhancement template. Click View Guide for details.
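As a rough illustration of what an API-created enhancement template could carry, the Python sketch below assembles a request body that sets bitrate and GOP alongside two enhancement switches. Everything specific here is an assumption to be checked against the MPS API reference: that the relevant action is CreateTranscodeTemplate, and that the field names (`VideoTemplate`, `AudioTemplate`, `EnhanceConfig`, `VideoEnhance`, `ColorEnhance`, `ArtifactRepair`) and values (`"ON"`, `"weak"`) are as shown. The helper `build_enhance_template_request` and the sample name and numbers are hypothetical.

```python
import json


def build_enhance_template_request(
    name: str, bitrate_kbps: int, gop_frames: int
) -> dict:
    """Build a template-creation request body (hypothetical helper).

    All field names and values below are assumptions; confirm them in the
    API reference before use.
    """
    if bitrate_kbps < 0 or gop_frames < 0:
        raise ValueError("bitrate and GOP must be non-negative")
    return {
        "Container": "mp4",
        "Name": name,
        "VideoTemplate": {
            "Codec": "h264",
            "Fps": 0,  # assumed to mean "follow the source frame rate"
            "Bitrate": bitrate_kbps,  # not configurable in the console template
            "Gop": gop_frames,        # not configurable in the console template
        },
        "AudioTemplate": {
            "Codec": "aac",
            "Bitrate": 128,
            "SampleRate": 44100,
        },
        "EnhanceConfig": {
            "VideoEnhance": {
                "ColorEnhance": {"Switch": "ON", "Type": "weak"},
                "ArtifactRepair": {"Switch": "ON", "Type": "weak"},
            }
        },
    }


template_request = build_enhance_template_request("enhance-3mbps-gop50", 3000, 50)
print(json.dumps(template_request, indent=2))
```

If the call succeeds, the template ID it returns would take the place of the preset ID in the Definition field of a ProcessMedia request.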

How to Achieve the Best Enhancement Effect?

Audio/video enhancement includes features such as video artifacts removal, denoising, color enhancement, detail enhancement, face enhancement, and SDR2HDR. The best result depends on the source material, so start by testing different combinations of these features on your own content. If you are still not satisfied with the results, contact us to obtain detailed configuration recommendations and perform advanced parameter tuning.

Billing Overview

Audio/video enhancement is implemented based on transcoding. Therefore, initiating an audio/video enhancement task will incur two charges: audio/video enhancement and audio/video transcoding (standard transcoding or TSC transcoding). For details, see Audio/Video Enhancement Billing.